Sitting in the cavernous and nearly deserted Dallas Convention Center on a cool November evening, a team of four Berkeley Lab computer scientists anxiously awaited their chance to try to overwhelm one of the biggest communications networks in the nation. The team was going to demonstrate Visapult, a prototype application and framework for performing remote and distributed visualization of scientific data. Problems with network equipment kept delaying their start time, pushing it later and later into the night.


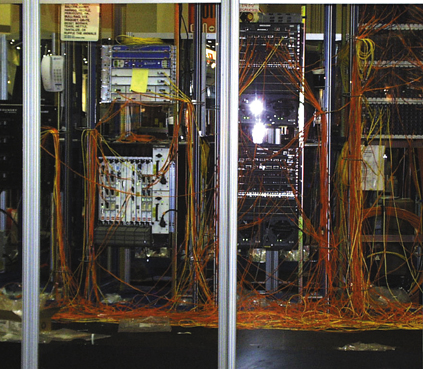

A Berkeley Lab team jammed more than 2 terabits of data in 60 minutes through SCinet, the network assembled to support the SC2000 high-performance computing conference.

The setting was SC2000, the annual conference on high-performance computing and networking. The network was SCinet, built specially for the conference, with a combined capacity more than 167,000 times that of a typical residential Internet connection and 200 times that of the connections used by most universities. The event was the SC2000 Network Challenge for Bandwidth-Intensive Applications, a new competition whose unstated goal was to break the network.

Even when the network problems were resolved and the team was ready to go, there were lots of unknowns. The team's application, called Visapult, hadn't been tested on such a wide scale. They were also relying on equipment at dispersed sites all coming together at the right time to take a shot at two high-water marks in the world of high performance computing and networking:

- Remote and interactive visualization of a 1 terabyte dataset, and

- Sustained transfer rates exceeding 1.5 gigabits per second.

As they waited for their chance, team leader Wes Bethel likened their situation to "running uphill against the wind." But if they succeeded, the team would saturate the available bandwidth and access a terabyte of data in one hour, a task that would require 24 hours using a Fast Ethernet connection. Besides Bethel, team members included Dan Gunter, Jason Lee and Brian Tierney, all of Berkeley Lab. Although the team finally got on the network late that evening, the official results wouldn't be announced for two more days.
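The Fast Ethernet comparison can be checked roughly. This assumes decimal units (1 TB = 10^12 bytes) and a link running at its full nominal 100 Mb/s with no protocol overhead:

$$
1\ \text{TB} = 8 \times 10^{12}\ \text{bits}, \qquad
t_{\text{Fast Ethernet}} = \frac{8 \times 10^{12}\ \text{bits}}{10^{8}\ \text{bits/s}} = 8 \times 10^{4}\ \text{s} \approx 22.2\ \text{h}
$$

Real transfers lose some capacity to protocol overhead. That overhead plausibly accounts for the gap between this ideal figure and the article's round estimate of 24 hours.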

[Photo: Wes Bethel]

But the team could wait. After all, their demonstration was really the culmination of a decade of extensive research at Berkeley Lab into developing reliable means of moving large amounts of data quickly across the Internet. For the U.S. Department of Energy and other national research institutions, such a capability is key to the future of scientific research as more and more large-scale experiments around the world generate massive amounts of information.

Often, the essential data is stored along with mountains of related information at various research sites. Pulling together the relevant data from different sources across different networks, then reassembling it quickly and seamlessly is essential to finding the science in the data.

First demonstrated at the SC99 conference, Visapult builds on research into high-speed, wide-area, data-intensive computing at Berkeley Lab going back to 1989. Initial efforts involved demonstrating the feasibility and necessity of using remote data. One early pilot project allowed doctors at hospitals scattered around Northern California to remotely view medical images stored in a central archive.

[Photo: Jason Lee]

In 1993, with funding from DOE and DARPA, Berkeley Lab scientists began working on the MAGIC Gigabit Testbed project. The testbed brought together a heterogeneous collection of networking equipment, computers, networking protocols and services to operate as a single application at high speed. One part of this project was the Distributed Parallel Storage System (DPSS), developed at Berkeley Lab. Because DPSS cached data on multiple disks across multiple workstations, it could manage the data as many parallel blocks rather than as a single large block. With this approach, the team of Berkeley Lab scientists led by Brian Tierney achieved very high throughput using low-cost commodity computing hardware.

"The real problem was in delivering high rates of data to the network," said Bill Johnston, head of the Lab's Distributed Computing Department and an expert in the field. "We found that if we broke the system of servers and networks down into parallel components, we could deliver the data in parallel pieces. It turned out that the operating systems we used were optimized to work better with these parallel streams."
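A short Python sketch illustrates the idea of splitting data into parallel blocks spread over several servers. It is not DPSS code and does not reflect the DPSS interface. The tiny block size, the round-robin placement and the names `stripe`, `fetch_block` and `parallel_read` are all hypothetical. Each simulated "server" is an in-memory dictionary. In a real system each fetch would be a network request to a separate disk server, which is where issuing requests concurrently pays off.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical, deliberately tiny block size so the example is easy to follow.
BLOCK_SIZE = 4


def stripe(data: bytes, num_servers: int, block_size: int = BLOCK_SIZE) -> list[dict[int, bytes]]:
    """Split data into fixed-size blocks and place them round-robin across servers.

    Each simulated server is a dict mapping block index -> block bytes.
    """
    servers: list[dict[int, bytes]] = [{} for _ in range(num_servers)]
    for index, start in enumerate(range(0, len(data), block_size)):
        servers[index % num_servers][index] = data[start:start + block_size]
    return servers


def fetch_block(servers: list[dict[int, bytes]], index: int) -> bytes:
    """Fetch one block from the server that holds it under round-robin placement."""
    return servers[index % len(servers)][index]


def parallel_read(servers: list[dict[int, bytes]], num_blocks: int) -> bytes:
    """Request all blocks concurrently, one worker per server, and reassemble them in order."""
    with ThreadPoolExecutor(max_workers=len(servers)) as pool:
        # map() yields results in submission order, even if fetches finish out of order.
        blocks = pool.map(lambda i: fetch_block(servers, i), range(num_blocks))
        return b"".join(blocks)
```

For example, `stripe(b"terrain tiles from many disks", 3)` spreads the 29 bytes over eight blocks on three simulated servers. `parallel_read(servers, 8)` then returns the original bytes. The result comes back in the right order because `ThreadPoolExecutor.map` yields results in the order the block indices were submitted.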

[Photo: Dan Gunter]

The first demonstration of the MAGIC Testbed involved TerraVision, a terrain visualization application that uses DPSS to allow users to explore a "real" landscape presented in 3-D. To produce the images, TerraVision requests the necessary sub-images from DPSS. Typically, this application requires aggregate data rates of up to 200 megabits per second. DPSS could easily provide this data rate from several disk servers distributed across the network.

DPSS subsequently played a key role in a test to see how quickly data from high-energy physics could be moved from an experimental site (in this case, the Stanford Linear Accelerator Center) to a scientific computing site (NERSC at Berkeley Lab) for analysis. The demonstration delivered 560 megabits per second, showing that it was possible to move a terabyte of data in one day. This project served as a prototype test of the STAR analysis system for analyzing experimental data, such as that being generated by the Relativistic Heavy Ion Collider at Brookhaven National Laboratory.
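As a rough check of the one-day figure, assume decimal units (1 TB = 8 × 10^12 bits) and a rate sustained at 560 Mb/s for a full day. On those assumptions, the rate comfortably exceeds what a terabyte per day requires:

$$
560 \times 10^{6}\ \tfrac{\text{bits}}{\text{s}} \times 86\,400\ \text{s} \approx 4.84 \times 10^{13}\ \text{bits} \approx 6.0\ \text{TB},
\qquad
\frac{8 \times 10^{12}\ \text{bits}}{86\,400\ \text{s}} \approx 92.6\ \text{Mb/s}
$$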

The demonstration at SC2000 showed that the technology is in place to routinely deliver gigabit data streams. For scientists interested in using visualization applications to explore data, this capability will make it easier to pull together research findings from various sites and improve scientific collaborations. In fact, Visapult was developed as part of a DOE project to enable the scientific visualization of combustion-related research at various national laboratories and universities.

[Photo: Brian Tierney]

To run their entry at the conference in Dallas, the Visapult team used the resources of several DOE organizations:

- disk servers at Berkeley Lab running DPSS;
- client machines on the SC2000 show floor, including an eight-processor SGI Origin computer in the DOE ASCI booth, running Visapult to remotely visualize an 80-gigabit dataset;
- dpss_get, a high-speed parallel file transfer application, running on an eight-node Linux cluster in Argonne National Laboratory's booth.

When it mattered, it all came together.

When the results were announced during the conference awards session, the Berkeley Lab team was awarded the "Fastest and Fattest" prize for overall best performance. The Visapult team recorded a peak performance level of 1.48 gigabits per second over a five-second period. Their 60-minute average throughput was 582 megabits per second. Overall, the team transferred more than 2 terabits of data in 60 minutes from Berkeley Lab to the SC2000 show floor in Dallas.
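The reported figures are consistent with each other, assuming decimal units (1 terabit = 10^12 bits). The 60-minute average accounts for the total transferred, and the five-second peak amounts to about 7.4 gigabits:

$$
582 \times 10^{6}\ \tfrac{\text{bits}}{\text{s}} \times 3600\ \text{s} \approx 2.1 \times 10^{12}\ \text{bits},
\qquad
1.48 \times 10^{9}\ \tfrac{\text{bits}}{\text{s}} \times 5\ \text{s} = 7.4 \times 10^{9}\ \text{bits}
$$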

"Science is increasingly dependent on networking, with the people, data and facilities dispersed around the country and the globe and this was an exciting chance to demonstrate what leading applications can do when they have access to advanced networks and services," said Mary Anne Scott, a program manager in the DOE Office of Science, and one of the judges of the SC2000 competition.

The ability to couple DOE research instruments and facilities, such as accelerators, light sources, electron microscopes and telescopes, with large-scale computing and data storage facilities is expected to produce new scientific breakthroughs.

"This vision of cooperative operation of scientific experiments and computational simulation of theoretical models is one of the ultimate goals for our work in data intensive computing," Johnston said. "With each success, such as the Visapult demonstration in Dallas, we're closer to making that vision a reality."
