
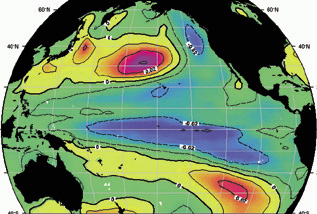

The similarity of this simulation to actual observed climate conditions shows that the model is able to faithfully capture large-scale climate patterns.


For many organizations, a 25th anniversary is a time for looking back at past achievements. Not so for the National Energy Research Scientific Computing Center (NERSC), which observes its silver anniversary this year by charting a future course aimed at keeping it on the forefront of computational science.

A U.S. Department of Energy national user facility located at Berkeley Lab, NERSC was one of the very first unclassified supercomputing centers established in the United States. After its humble beginnings with a cast-off computer, NERSC has consistently pushed the development of its capabilities in order to provide its users with the most powerful scientific computing resources available.

Its latest acquisition, called NERSC3, will be an IBM RS/6000 system consisting of 2,048 processors dedicated to large-scale scientific computing. The new system will have a peak performance capability of more than 3 teraflops (3 trillion calculations per second), which is more than 400 percent more powerful than NERSC's current computing powerhouse, a Cray T3E-900, and 12 million times more powerful than the Center's first computer. Installation of the IBM system began in June and will be phased, with completion by December 2000.

"It's all well and good to talk about peak speeds and numbers of processors, but the real benchmark of a high-performance computer is the quality of scientific results produced by the users," said NERSC Division Director Horst Simon. "For example, NERSC and scientists from Oak Ridge National Laboratory, the Pittsburgh Supercomputing Center and the University of Bristol were partners in winning the 1998 Gordon Bell Prize for the best overall performance of a supercomputing application.

"The key here is the application," Simon said. "The scientists involved were able to scale up their application, which is part of DOE's Materials, Methods, Microstructure and Magnetism Grand Challenge, to run on successively larger computers, and yield successively more accurate simulations of metallic magnets."

The result was that the application was the first documented scientific application to run at more than 1 teraflop and produce significant scientific results. (See sidebar.)

The ability to scale up applications to run on these larger, massively parallel machines holds promise for dramatic breakthroughs in computational science in areas that are currently under study by NERSC and other Department of Energy researchers.

Modeling Global Climate Change

The effects of human activities on our world's climate have been the subject of intense discussion and debate. But before policy makers make decisions which could affect our quality of life and standard of living, they need to have the best available information. Simulations of global climate change which span decades can provide such data, but current computing resources are capable of providing only a fraction of the data needed, not the whole picture. For instance, running a comprehensive climate model incorporating one year of data takes about 35 minutes using 256 processors on NERSC's Cray T3E. Although this is 28 times faster than the previous machine, it still results in a model that lacks regional detail, and it can only account for one year.

To have confidence in their results, scientists need to be able to simulate a century's worth of climate data. Then they need to change a few of the variables and run the data again. This ability to create an ensemble of simulations, then choose the one which is closest to actual known conditions, is critical to creating accurate computer models which can be confidently used as the basis for government policies.
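The gap between what is available and what is needed can be put in rough numbers. The short Python sketch below takes the 35-minutes-per-simulated-year figure quoted above and estimates the machine time for a century-long run and for an ensemble of such runs. It assumes that cost grows linearly with the number of simulated years, that the model resolution stays the same, and that ensemble members run one after another; the ensemble size of 10 is a hypothetical value chosen only for illustration.

```python
# Figure quoted in the article: one simulated year takes about
# 35 minutes on 256 processors of NERSC's Cray T3E.
MINUTES_PER_SIMULATED_YEAR = 35


def wall_clock_hours(simulated_years, ensemble_members=1):
    """Estimate machine time in hours.

    Assumes cost scales linearly with simulated years and that
    ensemble members are run sequentially on the same 256 processors.
    """
    total_minutes = simulated_years * ensemble_members * MINUTES_PER_SIMULATED_YEAR
    return total_minutes / 60


century_hours = wall_clock_hours(100)
# An ensemble size of 10 is a hypothetical value, not from the article.
ensemble_hours = wall_clock_hours(100, ensemble_members=10)

print(f"One century-long run: about {century_hours:.1f} hours")
print(f"Ten-member ensemble: about {ensemble_hours / 24:.1f} days")
```

Under these assumptions a single century takes more than two days of dedicated machine time, and a modest ensemble takes weeks, before any regional detail is added.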

The Burning Issues of Combustion


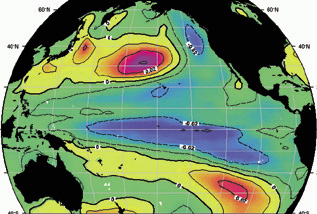

3-D simulations of turbulence such as this one are helping scientists tackle complicated problems of combustion.


Directly related to discussion of climate change is the issue of combustion, whether it occurs in power plant boilers or automobile engines. Gaining a better understanding of combustion can help improve efficiency, reducing both the amount of fuel which goes in and the amount of emissions which come out.

Solving the mysteries of combustion through numerical simulations requires a multidisciplinary approach that involves specialists in fields such as computational fluid dynamics and chemical kinetics. Although the interiors of internal combustion engines and power plant boilers are hot, dirty and dangerous environments for conducting experiments, the problem at hand is well suited to computer simulation.

With accurate combustion modeling, scientists can study the entire process and make incremental changes in the model system to test new ideas, a cost-effective alternative to building and modifying actual engine components for testing. As more powerful computers become available, scientists will be able to more accurately model the complex mixing of fuel and oxygen, and the burning of the mixture as the flame front moves through the cylinder. In addition, the technical knowledge accrued in this program will have implications for other combustion devices and other disciplines, such as aerodynamics and atmospheric simulation.

NERSC is currently used by more than 2,500 researchers at national laboratories and universities and in industry. In addition to climate and combustion, areas of computational science include fusion energy, computational biology, materials science, high-energy and nuclear physics, and environmental modeling.

Just as the range of research has expanded, so too has the range of resources offered to users.

Through the years, NERSC helped pioneer many of the computing practices taken for granted today. These include remote access by thousands of users, high-performance data storage and retrieval, on-line documentation, and around-the-clock support for users. One area that remains constant is that there is more demand for computer time than can be allocated.

The center was originally established in 1974 to provide computing resources to the national magnetic fusion research community. Over the years, its scope was broadened to support other DOE programs. In 1996, NERSC was reinvented at Berkeley Lab with the goal of providing both computing and intellectual resources to improve the quality of science coming out of the facility.

"NERSC has long been a model for other institutions wishing to establish supercomputing centers," said William McCurdy, who co-founded the Ohio Supercomputer Center in 1988 and became director of NERSC in 1991. McCurdy, a physical chemist who today heads the Computing Sciences Directorate at Berkeley Lab, adds, "The story of NERSC is really that of modern computational science in the United States. From pioneering large-scale simulations to developing the early time-sharing systems for supercomputers, much of the action has always been in this center."

Lab Researcher Achieves Supercomputing Milestone, Garners Gordon Bell Prize

After checking into his hotel room in Orlando, Fla., to attend the Supercomputing 98 conference last November, Andrew Canning did what many scientists do. He hooked up his laptop and logged onto a remote computer. But he wasn't checking email. He was achieving a supercomputing milestone.

Canning, a physicist and member of NERSC's Scientific Computing Group, was working with researchers at Oak Ridge National Laboratory and other facilities on DOE's Materials, Methods, Microstructure and Magnetism Grand Challenge. As part of its work, the group developed a computer code to provide a better microscopic understanding of metallic magnetism, which has applications in fields ranging from computer data storage to power generation.

The scientists gained access to several Cray supercomputers, each more powerful than the previous one, to run their application. With each step, the scientists expanded the size of their model and ran it more efficiently. From his hotel room, Canning was able to log on to a machine still in the Cray assembly facility and run the code on 1,480 processors at a speed of 1.02 teraflops, or more than one trillion calculations per second.
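For a sense of scale, the figures above imply an average sustained rate per processor. The following Python sketch simply divides the reported speed by the reported processor count; it assumes the load was spread evenly across all 1,480 processors, which the article does not state.

```python
# Figures reported in the article for the record-setting run.
SUSTAINED_FLOPS = 1.02e12   # 1.02 teraflops
PROCESSORS = 1480

# Average rate per processor, assuming an even distribution of work.
per_processor_mflops = SUSTAINED_FLOPS / PROCESSORS / 1e6

print(f"About {per_processor_mflops:.0f} megaflops per processor")
```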

This effort was a significant milestone because it both developed the theory that makes such simulations possible and reached the scale of more than 1,000 atoms for a first-principles simulation of metallic magnetism.

To get these results, Canning spent more than 14 hours online from his Orlando hotel. Three days later, he and his colleagues were named as winners of the 1998 Gordon Bell prize for the fastest scientific computing application. The next day, Canning received another awe-inspiring notification for his efforts: an in-room hotel bill of several hundred dollars in long-distance phone charges.
