Silver Anniversary for the TPC

BY LYNN YARRIS

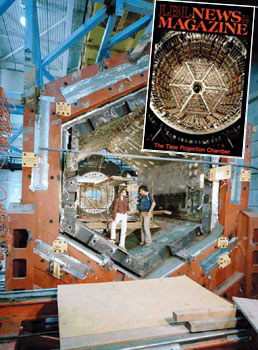

The original Time Projection Chamber (TPC), shown with its inventor David Nygren (left) in 1981.

“The day the TPC project was approved I felt a mixture of elation and dread. I think I must have felt the way a novice mountain climber does — rope coiled on his shoulder, pitons in his knapsack — walking towards the face of El Capitan and looking up.”

That’s how Berkeley Lab physicist David Nygren described his feelings about the construction of the first Time Projection Chamber to science writer Judith Goldhaber in a 1982 interview. Four years earlier, in 1978, the Laboratory had undertaken the building of a revolutionary new machine for detecting and identifying the invisible subatomic particles created in the collisions that take place inside accelerators.

Nygren had first proposed this machine in 1974 and called it a Time Projection Chamber, or TPC, because particles would be identified on the basis of the time it took their signal to move through a gas. The first TPC construction was once described by Berkeley Lab physicist Jay Marx, who was the project manager at the time, as “the equivalent of going from the Spirit of St. Louis to a 747 jet.”

For all of the perils and pitfalls (including a fried magnet, a ruined calorimeter, budgetary problems, and delays), this first TPC was built and installed in the PEP electron-positron collider at the Stanford Linear Accelerator Center. Now, 25 years after that first construction began, TPCs can be found in all the world’s top accelerator facilities and have literally been at the heart of such crucial experiments as the discovery of the Z boson, the study of quark confinement into particle jets, and the creation of a quark-gluon plasma. Not only have TPCs become the workhorses of today’s high-energy and nuclear physics research, they will be central to tomorrow’s experiments as well, including those at the Large Hadron Collider (LHC) and the TeV-Energy Superconducting Linear Accelerator (TESLA).

David Nygren, inventor of the Time Projection Chamber, speaks at the Berkeley Lab TPC Symposium.

To commemorate the 25th anniversary of the TPC, Berkeley Lab hosted a symposium on Oct. 17. More than 80 scientists from around the world gathered here to review the experience gained over the past 25 years in designing, building and operating TPCs.

“The broad view of the meeting from a large number of attendees was that it was quite a success,” says Michael Ronan of the Physics Division, who chaired the Berkeley Lab committee that organized the symposium. “We covered many different applications of TPC detectors in a number of different research areas, such as high-energy particle and heavy-ion nuclear physics, searches for dark matter and double beta decay, and neutrino measurements and special applications. There were good discussions of TPC performance, operation and problem solving. We also heard about new technical developments to enhance the performance of future TPCs.”

In his welcoming remarks at the symposium, Lab Deputy Director Pier Oddone briefly talked about building the original TPC at Berkeley Lab.

“We were too young and enthusiastic to realize that this was such an enormously ambitious project that no one sane would try to do such a thing,” Oddone said. “We tried to innovate three different technologies and initially we failed at all three.”

The TPC

A TPC is a barrel-shaped detector whose chamber is filled with pressurized gas and a perfectly uniform electrical field. The barrel is fitted around a beamline so that the particles created in a collision fly out through its chamber. A powerful magnet surrounding the barrel curves the path of the particles passing through the chamber so that their momentum can be measured. As the particles pass through the chamber they collide with atoms of the gas, knocking off a string of electrons that respond to the electrical field and drift towards the end of the chamber. At the end of the TPC chamber is a pie-shaped array of wires and sophisticated electronics that can detect and sift through thousands of particles simultaneously and can clearly distinguish between similar types of particles.
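The magnet’s role can be made concrete with the standard relation between a track’s radius of curvature and its momentum. The following is a minimal Python sketch, assuming a particle of known charge in a uniform field, where transverse momentum in GeV/c is about 0.3 × charge × B (tesla) × R (meters). The function name and the example field and radius are illustrative assumptions, not parameters of the original TPC.

```python
# Minimal sketch: transverse momentum from track curvature in a uniform
# magnetic field, p_T [GeV/c] = 0.2998 * |q| * B [T] * R [m].
# The example field and radius below are illustrative assumptions only.

# Speed of light in m/s times 1e-9: GeV/c per (tesla * meter * unit charge)
GEV_PER_TESLA_METER = 0.299792458


def transverse_momentum_gev(b_tesla: float, radius_m: float, charge_e: int = 1) -> float:
    """Return p_T in GeV/c for a track of radius `radius_m` in field `b_tesla`."""
    if b_tesla <= 0 or radius_m <= 0:
        raise ValueError("field and radius must be positive")
    return GEV_PER_TESLA_METER * abs(charge_e) * b_tesla * radius_m


if __name__ == "__main__":
    # A unit-charge track curving with a 2 m radius in a 1.5 T field
    print(f"p_T = {transverse_momentum_gev(1.5, 2.0):.3f} GeV/c")  # p_T = 0.899 GeV/c
```

Because the radius grows in proportion to momentum, high-momentum tracks are nearly straight, while slower particles curl tightly inside the chamber.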

A Time Projection Chamber is like a 3-D camera that captures images of the flight of subatomic particles. The TPC is at the heart of the STAR detector, now at RHIC in Brookhaven.

As Nygren once explained, “TPCs allow us to reconstruct any track in three dimensions by determining how long the electrons took to drift through a given distance in the gas, which of the wires on the pie-shaped array picked them up, and what point along the wire they hit and were recorded. Because of its ability to visualize tracks in three dimensions, a TPC can sort out multiple particles with ease.”
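The three measurements Nygren lists map directly onto three coordinates. The Python sketch below assumes a deliberately simplified geometry: a single flat sector with sense wires parallel to the x axis, electrons drifting along z at a constant speed, and hypothetical detector constants that are not those of any real TPC.

```python
from dataclasses import dataclass

# Hypothetical detector constants, chosen only for illustration; they are
# not the parameters of the original PEP TPC.
DRIFT_VELOCITY_CM_PER_US = 5.0   # electron drift speed in the gas
WIRE_PITCH_CM = 0.4              # spacing between neighboring sense wires
FIRST_WIRE_Y_CM = 20.0           # distance of wire 0 from the beam axis
ENDCAP_Z_CM = 100.0              # end-cap position; collision point at z = 0


@dataclass
class Hit:
    wire_index: int       # which sense wire on the end-cap array recorded the signal
    along_wire_cm: float  # where along that wire the signal arrived
    drift_time_us: float  # arrival time minus collision time


def reconstruct_point(hit: Hit) -> tuple[float, float, float]:
    """Map one end-cap hit back to an (x, y, z) point on the particle's track."""
    x = hit.along_wire_cm                                  # position along the wire
    y = FIRST_WIRE_Y_CM + hit.wire_index * WIRE_PITCH_CM   # which wire fired
    # Electrons that drifted longer started farther from the end cap
    z = ENDCAP_Z_CM - DRIFT_VELOCITY_CM_PER_US * hit.drift_time_us
    return (x, y, z)


if __name__ == "__main__":
    track = [Hit(10, 3.5, 8.0), Hit(11, 3.9, 7.6), Hit(12, 4.3, 7.2)]
    for point in map(reconstruct_point, track):
        print(point)
```

Each hit becomes one space point; a sequence of such points traces the track, which is why overlapping particles that would blur together in a two-dimensional view can be separated.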

Nygren has said the idea for the TPC came to him after he realized that real improvements in particle detection could not be achieved without a radical departure from the old ways. “Once I accepted this, I made the vital step of re-examining assumptions, and rather suddenly the idea of drifting particle track information parallel to rather than perpendicular to the magnetic field sprang into my mind.”

A quarter of a century later, Nygren’s radical new idea is a distinguished member of the physics establishment, and Nygren has been recognized for his invention with numerous awards, including DOE’s E.O. Lawrence Award and the American Physical Society’s W.H.K. Panofsky Prize.

New TPCs are still being built, and the reason was perhaps best summarized by former Physics Division director Robert Cahn, who once attributed the longevity of TPCs to “the purity and power of Nygren’s proposal.”

LDRD Projects: An Ongoing Success Saga

FY 2004 Awards Announced

BY LYNN YARRIS

Studies leading to the Keck Telescopes in Hawaii were made possible by director's funds at Berkeley Lab.

Do you know the common link between the use of supernovae to measure the expansion of the universe, the creation of the first high-temperature SQUIDs (Superconducting Quantum Interference Devices), the discovery of the genetic risk factors in heart disease, and the development of a computer model that can visualize the flow and chemical reactions of fluids through porous rock? All these projects were originally funded through the Laboratory Directed Research and Development (LDRD) program.

The LDRD program began funding projects in Fiscal Year 1992, but the capability of the Laboratory Director to use a certain amount of overhead funds has probably existed since the days of the Atomic Energy Commission. For example, studies that eventually led to the Keck 10-Meter Telescopes, the Advanced Light Source, and the B Factory all got their start through director’s funds at this Laboratory.

According to Todd Hansen of the Lab’s Planning and Strategic Development Office who serves as the program administrator for LDRD, “The federal government has long recognized the need to support innovative research at the national labs. It’s understood that employee-suggested research and development projects are an important element in addressing the nation’s scientific needs and advancing the missions of the laboratory’s customers.”

The idea is that laboratory directors have a means by which they can help nurture a promising new scientific idea or proposal. Often, the director’s funds have been the first source of support for research at the forefront of a scientific discipline. Because director’s funding comes at an idea’s infancy, it may take several years before a success can be measured. But a number of projects have already proven their worth.

Saul Perlmutter, the Physics Division physicist who heads the Supernova Cosmology Project, received LDRD grants from 1996 to 1999 to use the supercomputers at NERSC to analyze the spectra and model different scenarios of stellar explosions. These results led to the astonishing discovery of an anti-gravity force, a cosmic “dark energy” that is accelerating the rate at which the universe is expanding. John Clarke, a physicist with the Materials Sciences Division and a pioneer in the development and application of ultrasensitive SQUID detectors, received LDRD grants from 1988 to 1990 to fabricate the world’s most sensitive magnetometer at liquid nitrogen temperatures. This magnetometer could record fluctuations in the beating of the human heart and was one of the first practical applications of high-temperature superconductors.

“Considering the relatively small amount of funding (less than three percent of the overall budget in FY 2003), Berkeley Lab’s LDRD program offers a high rate of return for a modest investment,” Hansen says. “It’s also a critical tool for attracting and retaining skilled and innovative scientists, and contributes to the Laboratory’s ongoing mission to educate future scientists.”

The LDRD process and 2004 awards

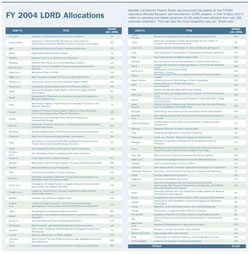


The call for new LDRD proposals goes out in the spring of the preceding fiscal year in a letter from Laboratory Director Charles Shank. Principal investigators submit their proposals to their divisional office (or offices, if it is a multi-investigator, multi-divisional initiative). Proposals are required to include a clearly stated problem that addresses a DOE mission or national need, coherent objectives, and a well-considered plan for leadership, organization and budget. Following the initial submission, proposals undergo a series of reviews at the division and Laboratory levels, where they are ranked. Director Shank makes the final selection.

For FY 2004 Shank awarded a total of $13.3 million to 82 projects, which were selected from 168 proposals submitted. He called this the most competitive year yet for LDRD funding. In announcing the awards, Shank said: “It seems that each year the level increases for the scientific potential and creativity of LDRD proposals. This year had an extraordinary group of highly promising directions for the laboratory.”

Based on the results of projects funded by the director’s funds in the past, somewhere in the list of 2004 awards could be one of the next big things in science.

SHARES Kicks Off

Berkeley Lab’s annual charitable giving campaign returns next month with a new, simpler pledge process. The SHARES program, in which employees are encouraged to donate to their favorite nonprofit organization or cause, will for the first time be fully automated and electronic.

Beginning Monday and throughout the month of November, donors can commit contributions through a web-based format created by United Way, which will act as the disbursement agent for donations. Berkeley Lab’s donor site, which is fully secure, will allow employees to select charities, choose a method of payment (payroll deduction, check, or credit card), and disburse funds among the charities by percentage, all through a simple series of clicks on the computer.
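Splitting a pledge “by percentage” raises a small practical question: what happens to the odd cent when a total does not divide evenly? The Python sketch below shows one common approach, working in whole cents and handing any leftover cents to the first charity listed. The function and the example amounts are hypothetical illustrations; this is not how the United Way site is known to handle rounding.

```python
def split_pledge(total_cents: int, percentages: dict[str, int]) -> dict[str, int]:
    """Divide a pledge among charities by whole-number percentages.

    Hypothetical helper. Works in integer cents so no money is lost to
    floating-point rounding; any leftover cents from rounding down go to
    the first charity listed.
    """
    if not percentages:
        raise ValueError("choose at least one charity")
    if sum(percentages.values()) != 100:
        raise ValueError("percentages must add up to 100")
    shares = {name: total_cents * pct // 100 for name, pct in percentages.items()}
    leftover = total_cents - sum(shares.values())
    first = next(iter(shares))
    shares[first] += leftover
    return shares


if __name__ == "__main__":
    pledge = split_pledge(10_001, {"United Way": 50, "Earth Share": 30,
                                   "Community Health Charities": 20})
    for charity, cents in pledge.items():
        print(f"{charity}: ${cents / 100:.2f}")
```

Because each share is rounded down, the leftover is always smaller than the number of charities chosen, so the adjustment never amounts to more than a few cents.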

Employees who do not have e-mail accounts can request paper forms by calling the Public Affairs Office at X5771.

The bulky, often confusing booklets of past years that accompanied the pledge packet have been transformed into easy-to-navigate links on the web. The entry page to the donation site lists several charitable options, including the United Way, Community Health Charities, the Bay Area Black United Fund, and Earth Share. Any 501(c)(3) organization is eligible to receive funding.

SHARES, now entering its sixth campaign, stands for Science for Health, Assistance, Resources, Education and Services. The program supports Berkeley Lab’s commitment to reach out to surrounding communities and help those organizations whose success depends largely upon the donations of others — in particular during these times of economic challenge.

Read “Today at Berkeley Lab” for more details and instructions, or write to [email protected] with questions.

Car Sculpture Is A Fish Out of Water

A strange-looking creature resembling a large metal fish with four wheels was spotted at the Lab a couple of weeks ago, parked near the pit. The “car sculpture” belongs to Mark Sippola, a postdoc in the Environmental Energy Technologies Division’s Indoor Environment Department. It is a continually evolving creation that he and a group of friends have worked on for the last few years.

“It started out as a 1972 Dodge Tioga camper, then was donated to an art class whose students began turning it into a fish,” says Sippola of the sculpture’s origins. “They were unable to finish it, so a friend of mine took it over, and we’ve been tinkering with it ever since.”

Using found and recycled materials, they welded together a metal frame, then tied on nearly 300 scales — made from scraps of sheet aluminum — using baling wire. It even includes pieces from his now-disassembled UC Berkeley Ph.D. ventilation system experiment.

“We acquired it with the idea of taking it to last year’s Burning Man celebration, but found out it was too tall to be street legal,” says Sippola. “So we lowered it and drove it 350 miles to the Nevada desert. Surprisingly, it handles pretty well.”

Sippola

The vehicle has been used as a traveling Karaoke machine, for tours of Napa Valley, and has made appearances at the last two “How Berkeley Can You Be” parades. There’s room in it to safely transport about 14 passengers. Right now it only gets about 10 miles to the gallon, but the group is looking into alternative fuel sources, Sippola says.

Normally, the vehicle is only brought out for special occasions. However, Sippola’s regular car was involved in an accident, so he was left without transportation for a few days.

“When I first pulled up to the entrance gate, the guard passed me through, then he did a double take as I drove past,” he said.

Despite its scaly facade, Sippola says the car isn’t waterproof, so it will stay under cover during the winter. But keep your eyes peeled come springtime — this fish may once again come for a swim at the Lab.

Short Takes

Bill’s Phase Transition

McCurdy

Staff in the Computing Sciences program wanted to do something for Bill McCurdy to honor his almost eight years of service in advancing computational science at Berkeley Lab. But he wasn’t retiring, just stepping down from his associate laboratory director post to concentrate on his research work. So they’ve put together “Bill McCurdy’s Phase Transition, or Back to His Future.” The tribute will begin at 3 p.m. in the Building 50 auditorium on Tuesday, Nov. 4, and all employees are invited.

Help Desk Cures Ailing Computers

Nat Stoddard assists a Help Desk customer.

E-mail not working? Can’t log on to the server? Monitor’s pitch black? For those who rely on computers to do their work, glitches like these can turn a good day into a tangle of frustration. But Lab employees need never fear, because the Help Desk is near. Actually, the group (part of the Information and Technology Services Division) is located in downtown Berkeley, but getting assistance from them is as easy as dialing “HELP” (X4357) or sending an e-mail to [email protected]. Standing by are four support engineers whose Herculean efforts keep the Lab’s 4,000 desktop computers up and running. They receive almost 3,000 calls each month and fix nearly 70 percent of them on the spot, walking callers through the problem in about five to six minutes. Despite the volume (and the sometimes-panicked state of their clients), the engineers are known for their patience and pleasant demeanor. “This is the best group ever,” wrote a customer in the Help Desk’s anonymous survey. “I seriously don’t know where we would be without them.”

Happy Birthday Dear EETD

The year was 1973. The Vietnam War was drawing to a close, the Watergate scandal was heating up, and bell bottoms and platform shoes were all the rage. It was also the year the Environmental Energy Technologies Division was established at the Lab in response to concerns about the cost, availability, and environmental effects of energy. Today, a major area of research is making residential and commercial buildings more energy efficient and maximizing the health and productivity of their occupants. To celebrate this milestone, the group held a gala event on Oct. 30, which brought together former and current employees. The cafeteria was transformed into a visual timeline for the division, with photos from the past and posters of recent accomplishments lining the dining hall. Speakers included former Lab director Andy Sessler, as well as division head Mark Levine, and former heads Jack Hollander, Bob Budnitz, and Elton Cairns.

Kudos for Lab’s Help on K-12 Science Standards

Berkeley Lab’s Lynn Yarris, Rick Norman, and Rollie Otto recently received thank-you letters from Jack O’Connell, the State Superintendent of Public Instruction, and Reed Hastings, the president of the California State Board of Education, recognizing their contribution to the Science Framework for California Public Schools. The publication of the “Science Framework” completed the process of developing standards-based frameworks for mathematics, reading/language arts, history/social studies and science. Yarris, of the Communications Department, and Otto, head of the Center for Science and Engineering Education, served as editors and writers for the Science Framework; Norman, of the Nuclear Science Division, served on the Curriculum Framework and Criteria Committee and played a major role in the drafting of the physics section.

The Science Framework, which recommends curriculum for science education in California public schools, is based on standards adopted by the State Department of Education in 1998 and dedicated to Glenn Seaborg for his leadership in the development of these standards. Today all California high school science students and 5th grade students in public schools take year-end tests based on these standards. Instructional materials for grades K-8 must conform to the standards and other quality criteria set forth in the Frameworks.

Modeling a Cosmic Bomb

DOE ‘Big Splash’ Award Produces a NERSC First

BY PAUL PREUSS

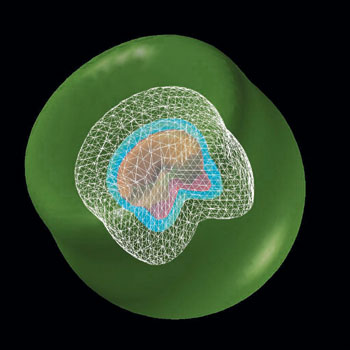

An imaginative view of a binary star system going supernova: the companion star excavates a "pit" in the expanding cloud of matter.

Earlier this year Lifan Wang of the Physics Division and his colleagues reported the first evidence of polarization in a “normal” Type Ia supernova, SN 2001el (see Currents, Aug. 8). The supernova’s otherwise normal spectrum showed an unusual glitch, however, which has led to the best-ever supercomputer models of the shapes of these exploding stars.

The visible surface (photosphere) of a Type Ia explosion typically expands at over 22 million miles per hour. SN 2001el’s spectrum had extra calcium lines, so strongly blueshifted that they indicated material racing toward the observer twice as fast.
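A quick calculation shows what those speeds mean for the spectrum. This Python sketch converts 22 million miles per hour to kilometers per second and applies the relativistic Doppler formula for material moving directly toward the observer. The choice of the Ca II K line (rest wavelength about 3934 Å in air) is an assumption for illustration; the article does not say which calcium lines were measured.

```python
import math

SPEED_OF_LIGHT_KM_S = 299_792.458
METERS_PER_MILE = 1609.344

# Assumption: the Ca II K line, rest wavelength about 3933.7 angstroms (air)
CA_II_K_ANGSTROM = 3933.7


def mph_to_km_per_s(mph: float) -> float:
    """Convert miles per hour to kilometers per second."""
    return mph * METERS_PER_MILE / 3600 / 1000


def blueshifted_wavelength(rest_angstrom: float, speed_km_s: float) -> float:
    """Relativistic Doppler shift for material moving straight toward the observer."""
    beta = speed_km_s / SPEED_OF_LIGHT_KM_S
    return rest_angstrom * math.sqrt((1 - beta) / (1 + beta))


photosphere = mph_to_km_per_s(22e6)   # just under 10,000 km/s
high_velocity = 2 * photosphere       # "twice as fast"

for label, v in [("photosphere", photosphere), ("high-velocity", high_velocity)]:
    shifted = blueshifted_wavelength(CA_II_K_ANGSTROM, v)
    print(f"{label}: {v:,.0f} km/s, Ca II K observed near {shifted:.0f} angstroms")
```

Doubling the speed roughly doubles the shift, so the high-velocity calcium shows up as a separate absorption feature well to the blue of the normal one.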

“One set of lines indicated velocity that was normal for a Type Ia photosphere, but the other showed very high velocity,” says Peter Nugent of the Computational Research Division (CRD). “Then Lifan showed us the spectropolarimetry. The high-velocity calcium line had a very different polarization than the supernova as a whole” — more strongly polarized and polarized at a different angle.

“This is a rare observation, a supernova in which components with different velocities are apparently oriented in different directions,” says Daniel Kasen, who works with Nugent building supernova simulations at the National Energy Research Scientific Computing Center, NERSC.

Existing simulations couldn’t explain the observation. “Very few people are doing such complex 3-D models,” says Nugent. “It’s a nasty task. It takes months just to run the codes.”

Visualization of density distribution in a Type Ia supernova, developed by Cristina Siegrist and John Shalf from codes by Daniel Kasen.

The leading theory holds that a Type Ia supernova begins as a white dwarf star accreting matter from an orbiting companion. The dwarf’s spin, the companion star, and the accretion disk all contribute to an asymmetric explosion.

Armed with a Department of Energy “Big Splash” award — supercomputer time for computationally intensive research — Nugent and Kasen modeled four geometries, each requiring some 20,000 processing hours on NERSC’s IBM SP, equivalent to 27 months on a single-processor machine. They generated synthetic spectra to see which most closely matched the observations. CRD’s Visualization Group made the results visible on the screen.
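The equivalence quoted above is simple arithmetic, and checking it also shows the scale of the whole study. A minimal Python sketch, assuming that "processing hours" means processor-hours, that an average month has about 730.5 hours, and that each of the four models used the full 20,000 hours:

```python
# Assumption: "processing hours" means processor-hours, i.e. the time a
# single processor would need to do the same work serially.
HOURS_PER_MONTH = 365.25 * 24 / 12   # average month, about 730.5 hours


def serial_months(processor_hours: float) -> float:
    """Months one processor would need for the given processor-hours."""
    return processor_hours / HOURS_PER_MONTH


per_model_months = serial_months(20_000)
all_models_years = serial_months(4 * 20_000) / 12

print(f"one model: {per_model_months:.1f} months")  # one model: 27.4 months
print(f"all four: {all_models_years:.1f} years")    # all four: 9.1 years
```

Spread across the many processors of the IBM SP, work that would have taken a single machine about nine years fit into a far shorter campaign.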

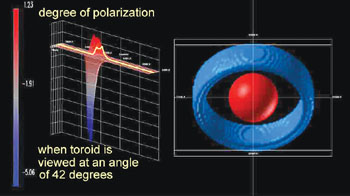

One model surrounded the photosphere with a spherical shell of high-velocity material. Another assumed the shell was ellipsoidal and rotated at an angle. A third showed a donut-shaped structure (toroid) around the photosphere. A fourth model assumed a spherical shell containing dense clumps.

Since the toroid resembled an accretion disk, at first Nugent favored it: “I tried to force the toroid idea on Dan, but he didn’t go for it.” Although spectral features were nicely reproduced when the toroid was observed edge-on, the polarization was much too strong.

Says Nugent, “Each of these models could explain the observations, but only from some favored point of view,” which would be the case if the supernova had a peculiar history, say, or were oriented in a special way. For example, the tilted elliptical shell would require a white dwarf spinning unusually rapidly and exploding off center.

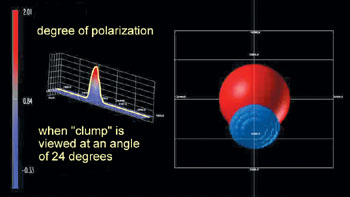

Top: The toroid model of SN 2001el most resembled an accretion disk, but the model did not match observations of the supernova's polarization features. Bottom: Of the four geometries modeled for SN 2001el, the spectral and polarization features of the "clumped sphere" closely matched observations when the clump (blue) was seen offset from the photosphere (red) by about 24 degrees.

The best fit was the so-called clumped shell. The physical picture is of a blob of calcium blowing away from the photosphere. Other clumpy scenarios are possible, all of them reasonable.

While SN 2001el yielded much new data, the models underscored just how limited information still is; observations don’t always catch the features modelers want. “Blue-shifted calcium is often overlooked, for example,” says Nugent. “I suspect high-velocity calcium may occur often, but you have to catch it early — it goes away fast.”

Kasen adds that “this clump has other stuff in it. We need to look at the whole spectrum, all the way out, before the supernova reaches maximum brightness.”

Clumps may even come from the companion star. Says Kasen, “People tend to forget about this, but in a binary system an explosion is going to run into the companion in just a few minutes. The 2-D models that have been done suggest the companion carves out a cone in the explosion.”

This effect suggests “a model that already fits the total observed asymmetry” of many Type Ia supernovae, Nugent says. He cites SN 1991T, hot and blue with very weak spectral features, possibly seen looking down the hole in the blast carved by the companion.

Supercomputer time and many more nearby supernovae are both needed to answer the intriguing new questions raised by Nugent and Kasen’s 3-D models.

More information about the shapes of exploding stars is available online on “Science Beat,” at http://enews.lbl.gov/.

Symons Speaks at ‘Atoms for Peace + 50’

BY PAUL PREUSS

Symons

On Oct. 22 James Symons, director of Berkeley Lab’s Nuclear Science Division, joined a group of distinguished speakers in Washington, D.C., to commemorate Dwight Eisenhower’s “Atoms for Peace” address to the United Nations in December of 1953.

“An interesting thing about the conference,” Symons notes, “was that the times when Eisenhower spoke weren’t so different from today, with tensions in Europe and the Far East and worries about the spread of nuclear technology.”

The U.S. had exploded the world’s first thermonuclear device, big as a building, in 1952, just four days before Eisenhower was elected. In August of 1953, the Soviet Union tested its first “H-bomb”; although only part of its yield came from fusion, the package was no bigger than the bomb that destroyed Nagasaki.

In this crisis atmosphere Eisenhower proposed creating a UN international atomic energy agency that would “apply atomic energy to the needs of agriculture, medicine, and other peaceful activities” and “provide abundant electrical energy in the power-starved areas of the world.”

In 1953, President Dwight Eisenhower delivered his "Atoms for Peace" address to the General Assembly of the United Nations.

Speakers at the “Atoms for Peace +50” event, sponsored by the Department of Energy and the Eisenhower Library, discussed the political context of Eisenhower’s address, the current role of nuclear power in U.S. energy policy, progress in nuclear medicine, and efforts to control proliferation, among other topics.

Office of Science Director Raymond Orbach chaired a panel on scientific horizons, at which Symons summarized prospects for nuclear science research as of 2003. Other speakers in this session covered particle physics and astrophysics.

In his 15-minute talk Symons used neutrino science to illustrate the evolution of the field, taking his cue from the DOE Fermi Award presented to neutrino pioneers John Bahcall and Raymond Davis later the same evening. Symons discussed the contributions of facilities like Brookhaven’s Relativistic Heavy Ion Collider (RHIC) to understanding matter in the earliest moments of the universe, and he made the case for new facilities, including a National Underground Science and Engineering Laboratory (NUSEL) to further neutrino studies and the Rare Isotope Accelerator (RIA) to investigate everything from nucleosynthesis to stellar explosions.

“Eisenhower’s view of how to face the problems of the fifties was to get people to work together to solve them,” Symons remarks. “It’s an approach with relevance to our situation today.”

Utah high-schoolers continue annual pilgrimage to Lab

BY D. LYN HUNTER

Peggy McMahon explains the 88-Inch Cyclotron to a group of high school students who visited the Lab last week.

Traveling from Utah to the Bay Area may seem like a long way to go to learn about science, but the trip is the event of the year for seniors at Logan High School, located about 80 miles northeast of Salt Lake City in a small town of the same name.

Their visit last week was the latest in an annual pilgrimage that Logan science teacher Herond Hoyt organizes for his 12th grade students. “We started about 14 years ago with 10 students,” Hoyt says. “But as word of how fun the trip was spread, more and more students wanted to come. Now we bring about 24.”

Students put their name on a waiting list as early as their freshman year to get a chance to travel westward with Hoyt and a few other Logan teachers. And while experiencing the sights and sounds of big-city life in the Bay Area is a thrill for these youngsters, their trip is grounded in science.

Packed in rented vans, the kids arrived at the Lab last Thursday afternoon. They were wowed by the hill’s panoramic views as they traveled up to the National Center for Electron Microscopy. When they hopped out of their vehicles, they were immediately drawn to the trees outside Building 72. “Look, are these redwoods?” the group wondered out loud as they rubbed their hands over the rough bark.

Once inside, the teens were treated to a presentation by NCEM’s Doug Owen, who talked about the center’s atomic resolution microscope and how it is used to take pictures of atoms. “We can magnify them up to 50 million times,” he explained. “That’s like taking a basketball and blowing it up to the size of the world.” He encouraged the students to stick with their science education and come back to NCEM when they’re older to try out their ideas.
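Owen’s comparison holds up to a rough check. A minimal Python sketch, assuming a basketball about 24 cm across and Earth’s mean diameter of about 12,742 km (both figures are our assumptions, not from the article):

```python
# Assumed sizes: a regulation basketball is roughly 24 cm across,
# and Earth's mean diameter is about 12,742 km.
BASKETBALL_DIAMETER_M = 0.24
EARTH_DIAMETER_KM = 12_742
MAGNIFICATION = 50_000_000

magnified_km = BASKETBALL_DIAMETER_M * MAGNIFICATION / 1000
ratio = magnified_km / EARTH_DIAMETER_KM

print(f"magnified basketball: {magnified_km:,.0f} km across")  # 12,000 km across
print(f"that is {ratio:.0%} of Earth's diameter")               # 94% of Earth's diameter
```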

Afterwards, the high schoolers made a stop at the 88-Inch Cyclotron and got a tour from Peggy McMahon, who showed them the plasma ion source, the control room, and target caves where the Gammasphere used to be stored.

The students also visited Stanford and attended some cultural events in San Francisco during their stay in the Bay Area. The trip costs around $619 per student.

When asked why he makes this trip year after year, Hoyt replied: “I want to give the students the best science experience possible, and to get them out of their element to see something new. It also helps give them exposure to advanced study and careers in science.” One student put it a bit more succinctly: “This is fantastic!”

Worms, and Viruses, and Zombies (oh, my!)

Computer Protection manager Jim Rothfuss shares what it’s like on the cyberwar front lines

Q: How does a virus, worm, or other bug infect a system or a machine?

A: The difference between a virus and a worm used to be fairly clear, but in recent years it’s been harder to tell. They use each other’s methods. Worms usually go out and look for vulnerabilities in the system and jump from machine to machine. Viruses traditionally transfer through e-mail and drop their payload, which might cause internal damage when the user runs the program. Nowadays, many of these malicious programs have attack characteristics of both worms and viruses.

The problem is getting worse. There are people with programming skills who are creating virus-writing programs. “Script kiddies” don’t have a lot of computer skills but know how to download virus-writing programs and make their own custom viruses.

There’s also speculation that another motive is appearing: money. It used to be that most of these hackers were just trying to gain notoriety. The recent SoBig worm has an engine built into it that sends out spam. A lot of folks see this as an indication that hackers may eventually sell advertisers their ability to spam via viruses and worms.

We also have seen hackers leaving behind “backdoors” and “zombies,” latent programs that the hacker installs to attack at a later time. A worm might spread to 1,000 machines and leave a zombie program on each one. The zombie might be brought back from the dead weeks, months, or even years later by triggering it with a command from another system or with another worm. So thousands of machines can become attackers in a matter of seconds.

Q: So do you see a light at the end of the tunnel? Will technology be able to win the fight against hackers and troublemakers?

A: I tend to have a philosophical view. Troublemakers have been around since the beginning of time. It’s like asking if we will eventually have peace on earth and will all wars cease. Probably not. A lot of people draw comparisons between safety and security, and there are many similarities. But there is one big difference. You can make rules about safety that will never change, and if people follow them, they will generally be safe. But with security, it’s different. You have an active, intelligent adversary out there, and no matter what you do or what rules you make, the adversary is working to undermine your efforts. We’ll never have a static technology that will make things absolutely secure. All we can do is try to stay a few steps ahead.

Q: How does Berkeley Lab arm itself against intruders?

A: One of our stalwart protections is the “Bro” system for intrusion detection and protection. It was started by Vern Paxson as part of a network research project around 1996. Mark Rosenberg, the part-time protection manager at the time, collaborated with Vern to put it into operation on LBLnet. It was one of the earliest detection systems used in a real operational environment. Bro has allowed us to protect the Lab from intruders while keeping the network open to the Internet.

Another program we’re working on is the Network Equipment Tracking System (NETS). We know intrusion detection alone cannot keep up with the attacks. Bro is more of a response mechanism, where a person sees an attack and responds. NETS will try to manage protection at the host level, meaning the individual computer. Even if an attack gets past the perimeter, Lab computers will be protected. We envision that every computer will automatically get a routine scan for vulnerabilities. If a computer is not safe, it will be disconnected briefly, before attack code has a chance to find and compromise it. We’re working on that.

Q: What about spam? Is there anything the Lab can do to reduce the influx?

A: Mark Rosenberg’s group (computing infrastructure technology) does a great job with this. They run both the virus wall and the spam wall on the e-mail systems, and together these two programs strip out a lot of garbage. They’ve recently put a new spam wall in place that looks not only at who is sending the spam messages but also at the content, which is much more effective. As for what an individual can do, there’s not much. It’s like junk mail at home and sales calls at dinnertime. My advice is to keep yourself off as many e-mail lists as possible. That’s one of the main places spammers get addresses.

Q: Your team also regulates the inappropriate use of Lab computers. What is the most frequent misuse and what are the consequences?

A: During the summer when there’s an influx of students, incidents of copyright infringement for music and DVD downloads go way up. Bro watches traffic in and out, and certain “signatures” trigger an alert. Next year, we plan to inform students when they come in that this isn’t kosher. We have the technical means of detecting this stuff, but I feel strongly that we not make a judgment on that activity. If we see something that appears to be out of compliance with Lab policy, we send a notice to the person we think owns that computer and to his or her supervisor, and it’s up to line management to deal with the problem.

Q: You worked security at Livermore, a classified lab with sensitive defense work. How does that job differ from computer protection at Berkeley, where things are unclassified?

A: In a classified lab the stakes are higher, and the damage can be devastating. Their aversion to risk means that only tried-and-true methods are used, such as maintaining a firewall to keep intruders out. Here, security is always a trade-off between possible damage and functionality. We can tolerate a higher level of risk than a weapons lab can, so we can support a more open network. One thing I have enjoyed about Berkeley Lab is the ability to take a calculated risk and try out exciting new methods of cyber protection.

My mantra at Berkeley is to protect the mission of the Laboratory, which is the Lab’s ability to do research. That is what affects my decisions. A firewall might be the best way to secure computers, but it may not be the best way to promote the Lab’s mission. Computers and the network are scientific tools and you can’t just secure the tools to the point that they’re unusable, but you can’t let malicious hackers ruin the tools, either.


AUTOS & SUPPLIES

95 HONDA ACCORD EX, 4 dr, white, spoiler, at, 6 CD, exc cond, $4,500, Rick, X5597, (530) 265-8875

93 FORD ESCORT WAGON, white, 98K mi, 2 owners, all records, good cond, ac, fm/am/cass, well maint, $3,000/bo, Simon, 846-9160, (925) 377-0453 home

92 TOYOTA PASEO, 2 dr, 5sp manual, 162K, $2,200/bo, looks/runs great, Tomek, X6276, 233-4639

92 MAZDA MX 3, red, 146K mi, 2 dr, 5 sp, very clean, cruise, am/fm/cd, all pwr, ac, sunrf, records, $3,100/bo, Guy, X4703, 482-1777

73 MERCURY MARQUIS BROUGHAM, 4 dr, orig light green w/ dark green roof, 429 engine, 3.5K mi on new engine, 2nd owner for 20 years, all pwr, HVAC, huge trunk, white sidewalls, seatbelts for 6, always mech maint, interior in pristine cond, $2,500, Stephanie, (415) 454-9810, cell (415) 246-3068

HOUSING

ALBANY, fully furn, by mo/semester, quiet, huge, eleg 2/2 condo on hill w/ view in well-kept secure complex, avail now, swim pool, 2 car garage, exercise rm, close to BART/shops/restaurants, 848-1830 [email protected]

BERKELEY, furn studio apt at Walnut/Cedar, walk dist to campus, $975/mo, kitchen w/ eating area, bthrm, liv rm w/ dble bd, 4 unit building, onsite laundry & garden, [email protected], Akos, (925) 258-0237 or (925) 352-4133

BERKELEY, 2 bdrm/1 bth apt, easy walk to BART, Nomad Café, w/d, dishwasher, offstr parking, hardwd flrs, $1,690, for photos & flr plans see http://6500shattuck.com, Ian, [email protected]

BERKELEY, new, spacious 3 bdrm/1.5 bath apt, easy walk to BART, w/d, dishwasher, offstr parking, hill views, hardwd flrs, $2,190, http://6500shattuck.com/, Ian, [email protected]

BERKELEY, cheerful light in 2 bdrm/1bth house, arts & crafts built-ins, easy walk to BART, $1,960, http://6500shattuck.com/, Ian, [email protected]

DOWNTOWN BERKELEY, lge studio, new bldg, 1/2 blk to campus/lab shuttle/pub trans/shops, modern, luminous, w/d, parking, elev, cable TV, wireless internet, shared terrace, Allston Way nr Oxford & Shattuck, $1,350/mo, [email protected]

LIVERMORE, 2bdrm house in Springtown, 1bth, 900 sq ft, fenced yard, attached garage, quiet street nr golf course, avail 12/01, $1,400/mo, $1,400 sec dep, credit report, refs, leave msg, Kevin, (925) 833-1668

NORTH BERKELEY, by week/mo, fully furn 1/1 flat, quiet, spacious & comfort, laundry rm, priv garden, gated carport; walk to lab shuttle/UCB/pub trans, BART, downtown Berkeley, 848-1830, [email protected]

NORTH BERKELEY, lovely bed & breakf, plus packed lunch or dinner, ($850/mo w/ lunch or dinner or $750/mo B&B only), in heart of North Berkeley, walk dist to lab shuttle, great neighbrhd, share bth, completely furn w/ tv, phone lines, linens, util incl, small swiss chalet rm in garden, $750/mo, extra lge rm in house, $850, 1 pers per rm, Helen, 527-3252

ROCKRIDGE AREA, beautifully-furn rm in priv house, walk to College Ave, BART, Lab shuttle, great neighbrhd, $600, other rooms avail, 655-2534

SOUTH BERKELEY, partially furn 2 bdrm/1 bth craftsman bungalow, 1,250 sq ft, renovated upper unit, hrdwd flrs, fp, yards, deck, dw, laundry, nr BART/UC/shops, $2,100/mo, 1st + last + $1,000 dep, pets ok, no smoking, Amy, X5044, 847-5711

WALNUT CREEK TOWNHOUSE nr BART, 2 bdrm/2-1/2 bth, end unit w/ fp, detached garage, 2 patios, central ac, located in Sunset Park, pool & tennis, $1,500/mo, Bob (925) 376-2211, [email protected]

MISC ITEMS FOR SALE

BOOKCASE, country style, natural oak, three shelves, 29.5”x11x44.5”, $50, exc cond, Frank, 864-7090, [email protected]

BUNK/TWIN BEDS, solid oak w/3 storage drawers, incl mattresses, $250; student desk, $20; 6 drawer dresser oak veneer $40; all for $285, Regine, X2915, 653-1654

CELSIUS RM EVAPORATIVE AIR COOLER & HUMIDIFIER, like new, Home Depot price over $100, asking $35/bo, Ed, 339-3505

KITCHEN TABLE w/leaf & 4 chairs, looks new, ex cond, $125; 42-in round table w/ laminated top, $20; metal typewriter table w/ one side drop leaf $25, John, 531-1739

WASHER & DRYER, Maytag Neptune, purch in 1999, only used 3 yrs for 2 person family, $1,000, cash only, in storage currently, Susan, X5845 or (925) 461-3008

SF GIANTS PARTIAL SEASON TICKETS, pair of great seats at PacBell Park, (3rd base, lower deck, row 29, sec 127), wish to share, 1/4 or 1/8 share, 10 or 20 games for next yr, no seat license fee, Peter, (415) 641-0776

SF JAZZ TICKETS, great seats, K. Jarrett with G. Peacock & J. DeJohnette, Sun, 11/09, 8 pm, $110/pr, Erika, X4930, 848-8173

SOUND SYSTEM, KLH speakers, AKAI receiver, Technics CD player, dual record player, teak cabinet, make offer, Edward, 525-0531

TWO WHITE BOOKSHELVES w/4 adj shelves $35 ea; bdrm furn, $800 for 5 pieces, open to neg; computer table, $100; tall wooden CD holder, $15; cream couch set, good cond, $60; blk dining set w/ 4 cushioned chairs, $125; 2 crystal bedside lamps, $20 pair, Sreela, X4391, 655-3295

OTHER

HARVARD BAND/HRO alumni wish to play at Harvard-Stanford basketball game, Dec 28 at Stanford, we have music, can get instruments, Mark, X6581

FREE

Basketball rack on wheels, Duo, X6878, 528-3408

VACATION

PARIS, FRANCE, nr Eiffel Tower, furn eleg sunny 2 bdrm/1 bth apt, avail year-round by week/mo, close to pub trans/shops, 848-1830, [email protected]

TAHOE KEYS at South Lake Tahoe, house, 3 bdrm, 2-1/2 bth, fenced yard, quiet sunny location, skiing nearby, great views of water & mountains, $195/night, 2 night min, Bob (925) 376-2211, [email protected]