Quark Matter Scientists Make Headlines in Oakland

BY LYNN YARRIS

|

|

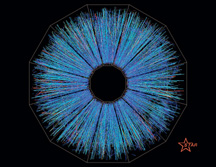

| Quark Gluon Plasmas may be produced in the fireballs of high-energy collisions between atomic nuclei such as this collision of gold ions recorded by the STAR detector at Brookhaven Lab's Relativistic Heavy Ion Collider. |

“Can we increase the power of our knowledge without also increasing our wisdom? How do we decrease the growing divide between knowledge and wisdom? That is the challenge I leave you with,” said Oakland Mayor Jerry Brown in welcoming nearly 700 physicists from around the world who gathered at the Oakland Marriott City Center, from January 11 through January 16, for a conference called Quark Matter 2004.

Hosted by Berkeley Lab, Quark Matter 2004 brought together theorists and experimentalists to discuss the ongoing search for the quark gluon plasma (QGP). This ephemeral, ultradense form of matter, which existed in the first few microseconds after the Big Bang, is believed to have set the stage for the stuff that makes up our universe today.

Quarks are one of the basic constituents of matter. Gluons are carriers of the strong force that binds quarks together to form, for example, the protons and neutrons of atomic nuclei. In the ordinary matter of today’s universe, quarks are never free of other quarks or gluons. However, in experiments at powerful particle accelerators, such as the Relativistic Heavy Ion Collider (RHIC) at Brookhaven National Laboratory (BNL), collisions between high-energy beams of atomic nuclei create incredibly dense fireballs in which temperatures reach nearly one trillion degrees above absolute zero and pressures become incomprehensibly enormous.

Under such extreme conditions, comparable to those first few microseconds after the Big Bang, the ties that bind quarks and gluons are expected to melt, creating a plasma of free-floating individual particles as different from ordinary matter as water is from ice or steam. The QGP created in the aftermath of the Big Bang immediately cooled to form the state of matter we think of as “ordinary.” By recreating a QGP in particle accelerators and studying its properties, scientists hope to gain new insights into how our universe was formed and a better understanding of the behavior of atomic nuclei.

Mayor Brown’s question to the attendees of Quark Matter 2004 proved to be in keeping with the overall tone of the conference. Scientific discussions sometimes seemed to veer towards the philosophical as participants grappled with the issue of recognizing the QGP once it has been produced. Several newspaper reports on Quark Matter 2004, including three in the New York Times, suggested scientific confusion as to precisely what a QGP is. Not true, as Hans Georg Ritter of Berkeley Lab’s Nuclear Science Division (NSD), and one of the principal organizers of Quark Matter 2004, explains.

“The quark gluon plasma is well-defined,” he says, “but the experimental measurements are complicated and subject to interpretation. We believe we know at what temperature and density a quark gluon plasma will occur, but we have no way of directly measuring the temperature and density inside a nucleus-to-nucleus collision.”

The result, Ritter says, is that scientists must infer the creation of a QGP based on interpretations of experimental data. The lack of agreement on the QGP’s signature has led to subjective interpretations. Some scientists, such as Miklos Gyulassy, formerly of Berkeley Lab and now with Columbia University, argue that the evidence for a QGP having been produced at RHIC is “overwhelming.” Others, such as Ritter, take a more cautious approach, saying “if you want a proof based entirely on experimental facts, we are not there yet.”

Oakland Mayor Jerry Brown welcomed an international group of scientists to the Quark Matter 2004 conference by praising the power of knowledge tempered by wisdom.

NSD theorist Xin-Nian Wang, another principal organizer of Quark Matter 2004, also downplays the fact that there were no major announcements at the conference, and the idea that scientists are confused over what a QGP is.

“I don’t think you could find a scientist at the conference who does not believe that a quark gluon plasma has been produced at RHIC,” he says. “QGP is the most efficient explanation of the current experimental data, but the scientific process requires us to eliminate other possible explanations for the experimental results before declaring that the quark gluon plasma has been found.” Expressing his own optimism, Wang adds, “We are moving in that direction.”

Ritter and Wang agree that a great amount of scientific progress has been made in recent years at both RHIC and CERN. New phenomena have been observed and their properties are being studied. This, in turn, has yielded new information on the structure of the QGP, especially at the transition phase which, according to Wang, is proving “even more complicated” than theory predicted.

Before the start of its scientific program, Quark Matter 2004 featured a Sunday afternoon workshop for teachers which was organized by NSD’s Howard Matis and Peter Jacobs.

“The idea was to show a broader community what is being done in our field,” says Matis. “One of the best ways to do this, we think, is through teachers and their students.”

Matis and Jacobs rounded up speakers known for their ability to communicate science to nonexperts. Talks were given on the evolution of science to modern physics; particle accelerators and detectors; the relationship between subatomic particles, atomic nuclei and the cosmos; and what happens to atomic nuclei at a trillion degrees. Nearly 60 teachers and students attended the workshop, mostly from Bay Area high schools and community colleges, but one teacher came from as far away as Oregon.

PowerPoint presentations of many of the scientific talks at Quark Matter 2004 and all of the talks given at the teachers’ workshop can be downloaded from the conference Website at http://qm2004.lbl.gov/.

Molecular Foundry Groundbreaking Represents New Thrust in Science

BY RON KOLB

Construction begins on the Molecular Foundry, between Building 66 and NCEM, and will continue through 2006.

Next Friday, Jan. 30, will mark the official beginning of what could be the shaping of a new research character for Berkeley Lab in the coming years.

The Molecular Foundry building, for which a VIP lineup will break ground in an outdoor ceremony (weather permitting) at 11:30 a.m., represents a whole new thrust and direction for doing science, according to Deputy Director Pier Oddone.

“It will be a new kind of facility for us,” he said. “It’s not accelerators, and it’s not a computing center. It’s more subtle. It takes many different techniques to develop nanoscience, seeking an understanding of complicated things, all backed by theory. This facility will have six labs, all integrated with a cadre of experts who can help people throughout the country to work on these problems.”

In addition, the Foundry will be “a portal into our other facilities,” he said, pointing especially to the Advanced Light Source, the National Center for Electron Microscopy, and the National Energy Research Scientific Computing Center (NERSC), all essential to characterizing nanosystems.

Work has already begun on the site of Berkeley Lab’s first major new research structure since the Genomics Building (84) was opened in 1997. But next Friday marks the ceremonial start of the project. All Lab employees are invited to attend the program, scheduled for the lower parking area behind Building 66. (If it rains, the ceremony will be moved indoors to the 66 auditorium, and seating will be on a space-available basis.)

Rep. Mike Honda will speak at the ceremony.

The event is being staged in conjunction with the Bay Area Science and Innovation Consortium (BASIC), an action-oriented collaboration of the region’s major research universities, national laboratories, independent research institutions, and research and development-driven businesses and organizations. The Lab is an active member of BASIC and helped to prepare the report Nanotechnology in the San Francisco Bay Area: Dawn of a New Age, which will be released after the groundbreaking.

Featured speaker will be Rep. Mike Honda (D-San Jose), a strong supporter of regional nanotechnology development. He was cosponsor of the Boehlert-Honda Nanotechnology Act of 2003, which authorizes $3.7 billion over the next three years for nanotechnology research and development programs at the National Science Foundation, the Department of Energy, the Department of Commerce, NASA, and the Environmental Protection Agency. The bill has passed the House and the Senate and was signed by the President.

BASIC is sponsoring an afternoon forum, “The Architecture of Nanoscience in the Bay Area: Education, Research and Industry,” in the Building 66 auditorium from 1 to 2:30 p.m. Among the forum panelists are Berkeley Lab scientists Paul Alivisatos and Carolyn Bertozzi.

The $85 million Molecular Foundry building will be a 94,500-square-foot, six-story lab and office complex on a hillside site between Building 66 and NCEM. It is due for completion in 2006.

Say Goodbye to EPB Hall, and Hello to New Hillside Vista

Using golden hammers, this group kicked off the EPB demolition. They include (left to right) Deputy Directors Pier Oddone and Sally Benson, DOE Site Office Manager Dick Nolan, Lab Director Charles Shank, Facilities Director George Reyes, and Demolition Manager Wayne Evans.

“We decided to have the demolition project equivalent to a groundbreaking,” said Goglia, project director for the deconstruction of the Bevatron’s old external proton beam (EPB) experiment hall. “We called it ‘It’s a Hit!’”

So various VIPs with gold hammers, led by Lab Director Charles Shank, congregated at a vertical steel column last Friday and took their respective shots at history, marking the official start of a project that will dramatically change the visual landscape of Berkeley Lab.

The hall, an industrial high-bay with massive metal plates and fiberglass sections, is one of the first dominating sights that greet visitors coming through the Blackberry Gate. It is also clearly visible from downtown Berkeley, especially along Hearst Avenue.

By the end of March, it will be gone.

Built in 1967, the hall was a structurally independent addition to Building 51, which housed the Bevatron accelerator. After the Bevatron shut down in 1993, the experimental equipment and concrete shielding blocks were removed. In recent years, areas in the EPB hall have been used for miscellaneous storage and as staging areas.

Actual demolition and removal will be completed by a subcontractor, Evans Brothers, Inc., an experienced local demolition company founded in 1976. Project Manager Richard Stanton says, “Evans Brothers has a challenging task over the next few months. They’ll take down a structure that’s about six stories high and the size of a football field, in the middle of a busy laboratory site.”

Facilities Director George Reyes and his team successfully captured half of a $3 million special deconstruction fund in the Department of Energy’s Office of Science to pay for the demolition. “Since the EPB Hall’s removal will free up space for potential future activities, it was an ideal candidate to help the Lab meet a recently established DOE policy,” Reyes says, “which requires each square foot of new construction to be balanced by the transfer, sale, or demolition of an equivalent amount of excess space.”

Lab management hasn’t reached a decision yet on long-term use of the new space, but some of it will be used initially for staging and storage, parking and other interim functions. Its central, flat location makes it ideal for various options.

The hall project does not include the demolition of the entire Bevatron complex, which is expected to cost between $65 million and $81 million. The Lab has submitted requests to the DOE for such funding, to fulfill what Director Shank has described as his “crusade” to recapture this valuable real estate “and return it to useful earth.”

According to Lab Superintendent John Patterson, drivers and pedestrians should be careful for the next 12 to 16 weeks, during which trucks will be making up to 25 trips per week. Rush hour periods will be avoided, and traffic control will be in effect, as necessary.

“We are looking forward to the safe and timely completion of Evans Brothers’ work in the spring,” says Reyes, “and a little more open space to work with.”

Short Takes

The Muscle Behind Lab Operations

Plant Maintenance Technician Edward Miller works on a leaky steam boiler.

The cutting-edge research conducted here at the Lab has put us on the world’s scientific map. But without the numerous men and women of the Facilities Division, it would be difficult, if not impossible, to produce these important discoveries.

What does it take to keep the Lab up and running? Here are statistics on just some of the employees needed to make sure water is running, lights are working, supplies are on hand, offices are clean, and buildings are safe:

- Plumbers: 5
- Painters: 5
- Electricians: 19
- Custodians: 40
- Carpenters: 18
- Gardeners: 3
- Maintenance: 26
- Architects/Engineers/Project Managers: 39

A New Club on the Hill

Larry Guo

While the Lab has employee organizations devoted to everything from dancing and karate to archery and ultimate Frisbee, there hasn’t been (in recent history) a group for staff of Chinese and Asian descent. Now there is, thanks to Larry Guo, with the Human Resources Department.

Starting last year, he organized informal gatherings with other Asian employees. After several of these functions, the group decided to make it official and create their own club: the Berkeley Lab Chinese and Asian Association.

“We estimate that there are more than 300 Asian employees and guests currently working at the Lab,” says Guo. “We’d like to bring these people together so we can participate in cultural and social activities as a group of people with a shared background.”

Among the events planned are weekend trips to national parks, sports tournaments, karaoke parties, and lectures. An annual award ceremony for “the Best Asian Scientist at the Lab” will also be held.

The new club will host its first event this Saturday from 11:30 a.m. to 5 p.m. in Perseverance Hall, located in Building 54. For more information, contact Guo at [email protected].


This Month in Lab History

In January of 1963, the Lab’s police officers came home victorious after winning first place at a recent intramural pistol competition. The “shoot-off” between Berkeley, Livermore and Site 300 used to be an annual event that took place at Site 300 in Livermore. The proud marksmen are, from left to right, Ken Webb, Roy Power, Bob Tuner, and Don Rice.

Playing Keep-Away With Carbon

At the end of 2003, the American Geophysical Union issued an unequivocal message: “Human activities are increasingly altering the Earth’s climate . . . A particular concern is that atmospheric levels of carbon dioxide may be rising faster than at any time in Earth’s history, except possibly following rare events like impacts from large extraterrestrial objects.”

Karsten Pruess (left), Tianfu Xu (center), and John Apps used TOUGHREACT to model CO2 in saline aquifers.

Barring an asteroid strike, human production of CO2 is not likely to slow down soon — and there’s an urgent need to find somewhere besides the atmosphere to put it. Sequestration in the ocean and in soils and forests are possibilities, but another option, sequestration in geological formations, already has a head start.

“The likeliest places to store CO2 are depleted oil and gas reservoirs,” says Karsten Pruess of Berkeley Lab’s Earth Sciences Division, who notes that porous reservoir rock can soak up gas, and caps of impermeable rock can seal that gas in place over geologic lengths of time. “The very existence of natural gas in a reservoir proves it is capable of long-term storage.”

Unfortunately, says Pruess, “depleted reservoirs don’t have enough capacity to store the huge amounts of carbon we’re producing. And often they’re too far from stationary sources, like power plants and refineries, where it’s practical to collect CO2.”

An attractive storage alternative to depleted reservoirs is saline aquifers. In these formations, beds of porous sandstones containing very salty water (with a salinity comparable to that of seawater, and thus not a potential source of drinking water) alternate with impermeable shales.

A natural laboratory

Few places on Earth have a higher concentration of refineries, power plants, and other fixed CO2 sources than the Gulf Coast of Texas. At over 160 million metric tons (in 1999), Texas produces almost twice as much CO2 as runner-up California among U.S. states, more than the entire United Kingdom.

These numerous sources of recoverable carbon waste sit atop enormous beds of deep saline aquifers, laid down in the Tertiary Period by rivers flowing towards what nowadays is the Gulf of Mexico. Pruess and other members of the Earth Sciences Division have partnered with the Texas Bureau of Economic Geology to develop computer models that can better simulate what’s likely to happen when carbon dioxide and other byproducts are injected into these formations.

The Houston Ship Channel is home to numerous CO2-producing industries.

Modeling is more complex than simply calculating the movement of CO2 through sand and rock. Storage capacity depends not only on a formation’s porosity and other structural properties but on its chemistry. And few industrial sources emit pure carbon dioxide; instead, the CO2 is likely to be mixed with other acidic gases, including such unpleasant contaminants as hydrogen sulfide and sulfur dioxide.

TOUGHREACT, a new version of the veteran TOUGH2 simulation program, was developed by Pruess and his colleagues Tianfu Xu, Eric Sonnenthal, and Nicolas Spycher to address processes like acid mine drainage, waste disposal, and groundwater quality, where chemistry plays a critical role. Geochemist John Apps helped apply TOUGHREACT to the problem of CO2 sequestration in the Gulf Coast sediments.

“The key to modeling these systems is to incorporate accurate geochemical data,” says Apps, “and then to calibrate what the model predicts against actual experience in the field.” Apps, Xu, and Pruess presented the results of their work at the 2003 meeting of the American Geophysical Union in San Francisco.

The chemistry of the rocks

“Huge thermodynamic studies have been made to calculate the properties of minerals from laboratory experiments,” says Apps. By calculating these properties for sets of minerals, modelers aim to determine under what conditions they are stable, and when they may dissolve and exchange chemical constituents with liquid and gas phases.

Tables compiled by different researchers using different techniques may disagree significantly, however. Apps characterizes his role in applying the TOUGHREACT simulation program to CO2 sequestration as one of “looking at the chemical systems, identifying the minerals involved, calculating their thermodynamic properties, and making sure there is internal consistency among all the minerals in the system.”

It’s a task that often requires recalculating from scratch, in order to model natural conditions accurately before any CO2 is injected. The payoff is great: “Our recent simulations have been eerily similar to what we observe in the field.”

“The development of these codes has always been problem-driven,” says Karsten Pruess. “Although much of our attention has been devoted to reservoir engineering and to studying the movement of wastes and pollutants, the recent work with the Gulf Coast saline aquifers has addressed the important question of whether we can use these codes to model natural systems. And indeed, we’ve shown that TOUGHREACT simulations handle these very well.”

It would be hard to overstate the potential benefits. According to the Department of Energy’s Office of Fossil Energy, deep saline formations in the United States may have the capacity to store up to 500 billion metric tons of carbon dioxide — enough to store all the CO2 produced in the country, at present rates, for a century or more.

Using Glass Beads to Detect Molecular Interactions

BY DAN KROTZ

Jay Groves’ colloid research could expedite the development of drugs that target cell membranes.

Berkeley Lab and UC Berkeley researchers have developed a fast, cheap, and highly sensitive way to detect molecular interactions without using sophisticated equipment. Their technique, which uses thousands of microscopic glass beads coated with a substance that mimics a cell membrane, opens the door for the high-throughput evaluation of an ever-growing family of pharmaceuticals that fight diseases by targeting membrane-bound receptors.

As described in a recent issue of Nature, the technique takes advantage of the unique properties of a colloid, a substance consisting of tiny particles suspended in a liquid. Given the right conditions, a slight change in energy can trigger a colloid to undergo a phase transition, in which short-lived clumps of particles disperse like the opening shot of a pool game. In this case, the tiny particles are silica beads, and when proteins bind to receptors embedded in their membrane-like outer layer, the beads scatter — a phenomenon that can be easily seen with a microscope.

“We position the colloid to teeter on the edge of a phase transition, so the slightest change, like the binding of proteins to receptors, causes the beads to spread out,” says Jay Groves of the Physical Bioscience Division and a professor of chemistry at UC Berkeley, who conducted the research with fellow UC Berkeley chemists Michael Baksh and Michal Jaros.

In this way, something as minute as a molecular interaction sparks a major change in how the entire population of ever-moving beads aggregates. This advantage, called signal amplification, means protein-receptor interactions can be detected with a low-magnification microscope, and this means drug candidates that target protein receptors can be evaluated with a fully automated process.

“The colloid system can be implemented into existing high-throughput screening protocols,” says Groves.

Other ways of determining how proteins latch onto cell membrane receptors involve labeling molecules with fluorescent markers, which is very costly and time-consuming, or using sophisticated equipment like a surface plasmon resonance spectrometer, which is not very sensitive. Because of their drawbacks, the techniques loom as a bottleneck in the evaluation of thousands of molecules that could potentially inhibit a receptor’s ability to bind with disease-causing proteins.

For example, approximately 60 percent of today’s prescription drugs target a class of signaling receptor called a 7-transmembrane helix protein. Although these drugs represent a huge market, averaging $100 billion annually, researchers have so far only determined how a small minority of the myriad types of 7-transmembrane helix proteins bind to receptor-specific molecules, also called ligands. As Groves explains, there is tremendous interest in finding ligands that bind with the remaining majority of poorly understood transmembrane proteins, or so-called orphan proteins.

“This would significantly advance development in this well-established goldmine of drug targets,” says Groves.

What’s needed is a high-throughput way of exploring these ligand-protein interactions, both to identify the ligands and to screen for molecules that compete with them. Groves envisions a process in which hundreds of colloid suspensions are created, each composed of glass beads coated with membranes containing different types of 7-transmembrane helix proteins. Next, a robot dispenses these colloid clusters into tiny wells, and potential ligands or ligand competitors are then added to each well. To determine if an interaction occurs, the colloids are scanned by an automated microscope designed to image thousands of samples in rapid succession. If a colloid’s beads scatter — something computer-assisted image analysis can detect — then the ligand or ligand competitor has latched onto the transmembrane protein.

“The entire process wouldn’t require sophisticated instruments,” says Groves. “High-throughput systems, such as those used by pharmaceutical companies, could run our assay at a rate of 100,000 measurements per day.”

In addition to expediting drug development, the technique could be used to diagnose the presence of membrane-targeting toxins such as anthrax, botulism, cholera, and tetanus. And because the majority of biochemical processes occur on cell membranes, it can also be applied to fundamental cell biology research.

Groves’ colloid technique isn’t his first foray into simplifying the study of cell membranes. His MembraneChip, a device developed a few years ago, detects protein-receptor interactions by attaching a cell membrane to a silicon electronic chip.

“I saw in these colloids a faster variation to the MembraneChip™,” says Groves. “And instead of powering the device with electricity, colloids use ambient thermal energy. It’s a power-free, label-free, and instrument-free system.”

200 Terabytes a Month and Growing: Johnston Sheds Light on Life at ESnet

ESnet’s traffic volume is doubling every year and currently surpasses 200 terabytes (200 million megabytes) per month.

Over the last decade, Johnston has served as the principal investigator of the DOE Science Grid and head of Berkeley Lab’s Distributed Systems Department. In a conversation with The View, he talks about the current state of ESnet and what to expect down the line.

Bill Johnston

How has your past experience led up to directing ESnet?

This is a career change for me. I’ve spent the last 15 years doing computer science research in distributed high-speed computing, which really led to Grids. There were probably a few dozen of us who formulated the idea of Grids four or five years ago. I see Grids as just another manifestation of high-speed distributed computing, and my interest in that field came directly out of the networking world. I was involved in many of the early so-called gigabit test beds, the very early attempts at building high-speed networking in this country.

How does ESnet impact scientific research?

The primary goal of ESnet in supporting the science community is providing the network capacity that is needed to support large-scale scientific collaborations. The science community is finally starting to trust that ESnet is a real and reliable infrastructure just like their laboratories are. So what’s happened over the last three or four years is that the science community has actually started to incorporate networking into their experiment planning as an integral part of it. The high-energy physics community, for instance, could not make the big collaborations around the current generation of accelerators work without networking. So their plans for analyzing data are absolutely tied to moving that data from the accelerator sites out to the thousands of physicists around the world who actually do the data analysis.

What are some of the latest developments with ESnet?

ESnet is two things. It is the underlying network infrastructure that provides the physical and logical connectivity for virtually all of DOE. It also provides a collection of science collaboration services to the Office of Science community: videoconferencing, teleconferencing, data conferencing, and most recently, digital certificates and a public key infrastructure (PKI). The big milestone there is that after a year of negotiation, we have cross-certification agreements with the European high-energy physics community. ESnet was the first network to achieve this authentication between the European and U.S. physics communities, paving the way for future international collaborations.

How much data travels on ESnet, and how fast is it growing?

We are currently at a level of roughly 200 terabytes of data a month; that’s 200 million megabytes per month. Now, there have been spikes that have made it look like it was growing much faster than that. One of these spikes triggered the planning for the new optical ring backbone. It’s a fundamental planning charge of ESnet to track this kind of thing, together with the requirements that the DOE program offices can predict, e.g., the ESSC (ESnet Steering Committee) will try to predict their requirements in terms of new major experiments coming online or new science infrastructure. In addition to which, we predict future needs based on current traffic trends. However, that’s a risky business because if a couple of big high-energy physics experiments come on and we don’t know about it, that’s a very non-linear growth and increase in traffic that may be transient.
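To make the trend-based projection concrete, here is a minimal Python sketch that extrapolates the quoted figures: roughly 200 terabytes a month, doubling every year. The function names are hypothetical, the steady exponential growth is an assumption for illustration only, and the conversion uses decimal units (1 terabyte = 1,000,000 megabytes), which matches the "200 million megabytes" figure. It is not ESnet's actual planning method, and it ignores the transient spikes Johnston describes.

```python
def projected_monthly_traffic_tb(years_ahead, current_tb=200.0, doubling_years=1.0):
    """Project monthly traffic, assuming steady exponential growth (an assumption)."""
    return current_tb * 2 ** (years_ahead / doubling_years)


def terabytes_to_megabytes(terabytes):
    """Convert decimal terabytes to decimal megabytes (1 TB = 1,000,000 MB)."""
    return terabytes * 1_000_000


if __name__ == "__main__":
    # Print a five-year extrapolation of the quoted starting point.
    for years_ahead in range(6):
        tb = projected_monthly_traffic_tb(years_ahead)
        mb = terabytes_to_megabytes(tb)
        print(f"year +{years_ahead}: {tb:,.0f} TB/month ({mb:,.0f} MB/month)")
```

Under these assumptions, three years of doubling would take 200 terabytes a month to 1,600 terabytes a month, which is why a single unexpected experiment can upset a forecast built this way.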

How are advanced networking technologies a departure from the technology they’re replacing, and what’s coming down the pike?

From a technological point of view, it’s an evolution from an ATM-based infrastructure to the current generation of ‘pure IP networks.’ So we have these optical rings that directly interconnect IP routers, and there is no intervening protocol. It’s essentially IP directly on the fibers, or actually on the lambdas (multiple channels of different ‘color’ light) in the case of this ring. This ring is a Qwest DWDM (Dense Wave Division Multiplex Optical Fiber), so on each fiber they carry either 64 or 128 10-gigabit-per-second clear communication channels. In the future, I believe we’re likely to see a fundamental shift in the architecture of the network and may see switched optical networks and the re-emergence of a level 2 switching fabric in the wide area, such as the Ethernet switches that everyone uses today in the local area networks (LANs).
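The channel counts quoted above imply a simple capacity calculation, sketched below in Python. The function names are hypothetical, and the transfer-time estimate assumes a fully utilized link with no protocol overhead and decimal units, so it is a best-case illustration and not a measured ESnet figure.

```python
def fiber_capacity_gbps(channels, gbps_per_channel=10.0):
    """Aggregate capacity of one fiber carrying `channels` equal-rate lambdas."""
    return channels * gbps_per_channel


def hours_to_move(terabytes, link_gbps):
    """Ideal time to move a data volume over a link (no overhead, full utilization)."""
    bits = terabytes * 1e12 * 8          # decimal terabytes to bits
    seconds = bits / (link_gbps * 1e9)   # gigabits per second to bits per second
    return seconds / 3600


if __name__ == "__main__":
    for channels in (64, 128):
        print(f"{channels} channels: {fiber_capacity_gbps(channels):,.0f} Gb/s per fiber")
    # A month's worth of traffic (200 TB) over a single 10 Gb/s channel.
    print(f"200 TB over one 10 Gb/s channel: {hours_to_move(200, 10):.1f} hours")
```

On these assumptions a fiber carries 640 or 1,280 gigabits per second in aggregate, and a single 10-gigabit channel could in principle move 200 terabytes in under two days.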

What’s a good metaphor for visualizing these types of networks?

You know when you go into a museum and they have audio wands to guide you. They have several sets of those. You have one museum, and that’s the infrastructure. But there may be two or three exhibits going on at the same time. Depending on which exhibit you’re interested in, they’ll give you a different audio wand, which will carry you in a different route through the museum and give you a different story about the pictures. That’s an example of overlay networks.

Which big science applications require the most bandwidth and processing?

Most of the big traffic spikes up to this point have been created by bulk movement of data. Increasingly, we are going to see, for instance, high-energy physics doing distributed processing of data, using Grid-based systems at many different sites to process a single data set. That will generate interprocessor traffic directly across the network. It’s a little hard to predict how that will compare with bulk data transfers, but it will be significant. That’s sort of the next big use: Grids coordinating miniclusters operating cooperatively on a single set of data, where the clusters are scattered all over the world.

What types of changes might we expect in ESnet in the future to accommodate scientific research?

In August 2002, we got together with representatives of the science community in eight major DOE science areas and asked these folks, “How is your process of science, how you conduct your science, going to change over the next 10 years?” We analyzed the impact on both middleware and networking. There are major changes in store, many of them having to do with things like the climate community. They believe the next generation of climate models has to involve coupling many different models together. And you’re not going to bring all of these models to one center to work together, so you’ll have to couple them together over the network in order to get a single, integrated model. That’s their vision, and it relies on a lot of advanced networking and middleware.

JGI Launches Community Sequencing Program

DOE to Support DNA Sequencing for Edgy Science

BY DAVID GILBERT


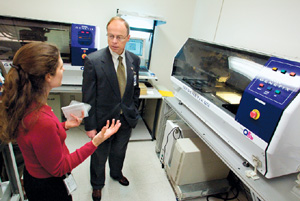

| During a recent visit to the Joint Genome Institute, Energy Undersecretary Robert Card learned about optimizing production sequencing from researcher Susan Lucas. |

The Department of Energy’s Joint Genome Institute (JGI) is poised for a resounding response to the age-old question, “If we build it, will they come?” Already, collaborators are queuing up to take advantage of one of the world’s most powerful DNA sequencing facilities for the debut of DOE’s Community Sequencing Program (CSP).

“The primary goal of the CSP is to provide a world-class sequencing resource for the expanding diversity of disciplines (geology, oceanography, and ecology, among others) that can benefit from the application of genomics,” says JGI Director Eddy Rubin.

The model put forward by the CSP will attract a new audience of researchers not traditionally served by sequencing centers that focus on biomedical applications, says Rubin. “Just as physicists and climatologists submit proposals to get time on accelerators and supercomputers to address fundamental questions, we are inviting investigators to bring to the JGI important scientific challenges that can be informed with at least 10 million basepairs of sequence. The CSP will, in effect, cover the biosphere of possibilities.”

The Office of Biological and Environmental Research within DOE’s Office of Science will allocate roughly 12 billion bases of sequencing capacity per year through the CSP, about four times the size of the human genome. This constitutes more than half of the JGI’s yearly production total. Starting in February, the JGI will consider CSP applications from researchers geared toward generating informative DNA sequences from whole organisms or communities of organisms. Successful proposals, says Rubin, will be driven by meritorious science that will advance the understanding of the natural world.

Proposals submitted to the CSP will be evaluated by a group of experts from the scientific community. Once approved, DOE will cover the costs associated with the sequencing effort at the JGI. Data generated under CSP-supported projects will be made freely available to the entire scientific community, in accordance with JGI’s data release policy.

Founded in 1997, the JGI was initially tasked with unraveling and interpreting a large complement of the human genome: chromosomes 5, 16, and 19, which together comprise nearly 11 percent of the three-billion-letter human genetic code. Now in its seventh year, the JGI has assembled considerable expertise and instrumentation at its Production Genomics Facility (PGF) in Walnut Creek. JGI has grown to over 61,000 square feet of laboratory and office space and is home to over 150 researchers and support staff, all UC employees from Lawrence Berkeley, Lawrence Livermore and Los Alamos National Laboratories.

Beyond tackling human sequencing, the JGI has gone on to elucidate the genomes of such diverse organisms as the puffer fish (Fugu rubripes), a sea squirt (Ciona intestinalis), portions of the mouse genome, as well as dozens of other organisms including those responsible for fermentation, photosynthesis in the ocean, Sudden Oak Death, Pierce’s Disease (affecting the vitality of the grape-growing and wine industries) and acid mine runoff. Among those organisms currently under investigation are a species of frog (Xenopus tropicalis), the poplar tree (Populus trichocarpa), and a type of green algae (Chlamydomonas reinhardtii).

The first deadline for proposals is Feb. 20. For additional information on the CSP and the submission of sequencing proposals, visit www.jgi.doe.gov/CSP/user_guide/index.html

Ethics Focus of ‘Town Hall’ Talk with Employees

BY RON KOLB


| Sally Benson, left, Charles Shank, center, and Pier Oddone discussed Lab issues, including personal and professional ethics, with employees gathered at the Shattuck Hotel. |

Director Charles Shank reminded employees at last week’s third Executive Brown Bag meeting that there’s much to celebrate in 2004 — the rebirth of the 88-Inch Cyclotron, the Molecular Foundry groundbreaking, the start of a new research office building, and the removal of the Bevatron’s experimental hall to provide needed central lab space.

He even told his 50-plus audience in the Shattuck Hotel Towne Room (most of whom were employees in downtown’s Building 937) that “we want to eventually move everybody back up the Hill.” That optimistic view was welcomed by those who heard it.

But Shank reserved the most time during the one-hour conversation for a more sobering topic: personal and professional ethics. Stung by incidents over the past few years that included both financial and scientific performance problems at the Lab, the Director vowed to explore this issue “in great depth” in the coming year.

“We have experienced lapses,” he told the group. “We need to determine what our ethical standard should be. We don’t have an ethics statement for this Lab. How can we come to terms with this? We all need to feel accountable for every sheet of paper that comes to us.”

He urged them to challenge and question the way business is done, and to express concerns about perceived problems through various established avenues, such as the “ethics line” (1-800-999-9057).

“We need to value our privilege for working here, at one of the greatest places on Earth to do science,” Shank said. “I want us to move from cynicism to pride. You need to feel good about what you do at work, and about the institution. Let’s raise our level of trust, to make this an even more desirable place to work.”

Deputy Director Pier Oddone echoed those views from a research standpoint, saying that “if there’s no integrity in science, we lose the battle. We have to hold each other responsible, and remind each other how important this is to the institution.”

That reminder may come in the form of an ethics statement that would be signed by every employee, Shank said. Business Services Division Director David McGraw is coordinating a working group that is discussing the values and behaviors that might be included in such a statement.

Shank also complimented the employees in Financial Services who “worked their tails off” to overcome various deficiencies that have surfaced there. He pledged to provide substantial support for acting Financial Officer Jeffrey Hernandez so that “we get a management and accounting system that’s up to world standards, the very best in the country.”

Anticipating anxiety about the prospect of Berkeley Lab being managed by someone other than the University of California, Shank provided an encouraging view. He said UC is in a “wonderful position” to compete for the Lab. Although no details are yet known about timetable and criteria for the Congressionally-mandated contract competition, the Director said it is “premature to worry about this. We will get through it, and UC couldn’t be in a better position (to retain the contract).”

The discussion about moving downtown people back on the Hill site caused particular excitement. Shank put his comments in the category of “hopes and dreams,” but Deputy Director for Operations Sally Benson was more specific.

She said she has asked McGraw to develop a proposal for a future administration building on the Hill that would provide “a single wonderful space for all of you.” The Lab has targeted DOE infrastructure funds to help pay for such a building.

McGraw, who is heading the Lab’s newest division, similarly reflected that his number-one priority is “developing a long-term strategy to get all of us back up the Hill. I have an acute understanding of what it’s like to run between 937 and the Hill.”

Wiener Prize in Applied Mathematics awarded to James Sethian

James Sethian, head of the Mathematics Department in the Computational Research Division and a professor of mathematics at UC Berkeley, has been awarded the Norbert Wiener Prize in Applied Mathematics by the American Mathematical Society (AMS) and the Society for Industrial and Applied Mathematics (SIAM). The prize, presented Jan. 8 at the joint AMS-SIAM meeting in Phoenix, is awarded for an “outstanding contribution to applied mathematics in the highest and broadest sense.”

Sethian’s award marks the eighth time the Norbert Wiener Prize has been awarded since 1970. The prize was last awarded in 2000, and one of the two recipients was Alexandre Chorin, a colleague of Sethian’s who also has a joint appointment in the Lab’s Mathematics Group and UC Berkeley’s Math Department.

“This recognition by the nation’s two leading mathematical societies is a powerful testimonial to the quality and influence of Jamie’s work,” said Horst Simon, director of CRD. “Applied mathematics is at the heart of our computational science program, and the research by James and his group members helps advance science at Berkeley Lab and at universities and national labs across the country and around the world.”

According to information distributed at the AMS-SIAM meeting awards ceremony, Sethian was honored “for his seminal work on the computer representation of the motion of curves, surfaces, interfaces, and wave fronts, and for his brilliant applications of mathematical and computational ideas to problems in science and engineering.”

His work has influenced fields as diverse as medical imaging, seismic research by the petroleum industry, and the manufacture of computer chips and desktop printers.

Flea Market

AUTOS & SUPPLIES-

‘95 TOYOTA PICKUP, 4 cyl, 5 sp, 240K mi, new clutch, $2,800, Rob, X7322, (707) 642-8847

HOUSING-

BERKELEY, 2 bdrm/1bth fully furn home for rent, family friendly neighborhood, close to N. Berkeley BART/stores/restaurants/I-80/UC Berkeley, $1,800/mo, avail 4/1, Julianna, (636) 279-2070, [email protected]

NORTH OAKLAND, spacious 2 bdrm cottage, hrdwd flrs, quiet, tree lined, resid street, w&d, lots of light & privacy, $1,350/mo, 1st + last + $1,000 dep, 1-yr lease, avail 2/14, Katherine, 697-1860, [email protected]

NORTH OAKLAND, spacious 1 bdrm upper flat in Victorian duplex, bay windows, lots of light, berber carpet & tile, w&d, privacy, quiet, tree lined, resid street, $1,050/mo, 1st + last + $1,000 dep, Katherine, 697-1860, [email protected]

BERKELEY HILLS, house in wooded neighborhood, furn, 3 bdrm/2 bths, lge kitchen, liv rm, din rm, study, priv yard, avail 3/1- 9/15/04, $2,100/mo, Gisela, 841-2066, [email protected].

NORTH BERKELEY HILLS, 1 bdrm, 1 person garden apt w/fp, off Grizzly Peak Blvd, 1blk to pub trans, nr lab & Tilden Park, avail 2/15, $950/mo incl util & laundry, no smoking/pets, 1st + last + sec $250, Barbara/Bill, 524-5780, 8 am to 9 pm

SAN PABLO, 2618 Tara Hills Drive, 3 bdrm/1 bth, lge family rm, nice backyard w/fruit trees, front yard, garage, parking space, w&d, $1,600/mo incl garbage, water & gardening, [email protected], Ingrid, 524-1143

BERKELEY, HEARST COMMONS, 1146-1160 Hearst, studio townhouses w/ decks, hrdwd flrs, skylights, dw, ac, sec, wired for cable/sat, Ian, 548-1831, $795/mo, [email protected] for pictures

BERKELEY HILLS, walk to lab from this wonderful 2bdrm + house, fp, quiet w/ lge yrd, $1,900/ mo, Katie, 531 9325

SOUTH BERKELEY, 1 bdrm refurbished apt, unfurn, 7 unit bldg w/character, quiet, 15 min walk to campus, nr bus/shopping/park, util incl, $1,050/mo, Kathy, 482-1777, 326-7836, Guy, X4703

BERKELEY HILLS, perfect for visiting scholars by wk/mo, quiet furn suite, sleeps up to 3 in 2 bdrm/1bth, elegant, spacious, bay views, DSL, cable, microwave, walk to UCB, Denyse, 848-1830, [email protected]

OAKLAND HILLS 3+ bdrm/3bth, liv rm, fam rm, din rm, remodeled, view, short walk to Redwood Park, $2,000/mo, avail 4/1 to 8/15/04, [email protected], 531-3556

NORTH BERKELEY beautiful house, lovely spacious rm w/ spectacular bay views & bthrm, furn w/ bed/desk/dresser, incl kitchen/laundry privil, nr #67 bus, for a single person, non-smoker, responsible, clean & quiet, no pets, $500/mo incl util, Ms. Wurth, 527-5505 after 1:30 pm, [email protected].

BERKELEY, beautiful 1 bdrm/1bth home, close to UC/BART, great grocery shopping, brand new, high ceilings, lots of charm, newly rebuilt, double pane windows, security alarm, cable & internet ready, avail 1/25, $1,300/mo, Tony, 849-3146, [email protected]

NORTH BERKELEY, 1 bdrm upper flat, fully furn, nr Glendale & Campus Dr, carpeted, laundry, deck, fp, parking for 1 car, no pets/smoking, $1,100/mo + dep & util, avail 1/21, Rochelle, (415) 435-7539

BRIONES unincorp Martinez, charming small 2bdrm/1bth, 2 story cottage w/character, tile/carpet flrs, high knotty pine ceilings, partially furn, in woodsy park setting on a small horse ranch next to Briones Park, mo to mo rent, $1,800/mo + partial util & dep, occas pet sitting credit, panoramic views, hiking & horse trails nr, priv gated road, secluded, quiet setting in the country, close to Orinda, Lafayette, Martinez & Pinole, nr freeways, 20-30 min drive to lab, Colette (925) 370-1292, 6-9 pm or weekends

BERKELEY avail immed, 2 bdrm/1bth home w/ garage, great neighborhood, close to BART/Solano Ave/shops/ restaurants, $1,950/mo + $3,000 sec, Kathy, 693-9213

BERKELEY HILLS, bdrm in quiet home, sep entr, own bthrm, 1/2 kitchen, $650/mo, Bill or Ada, 452 1580

NORTH BERKELEY, furn studio, avail 1/25, $750/mo, Rochelle, 883-0161

ALBANY, spacious 2/2 condo, Park Hill by Eucalyptus, fully furn, quiet & eleg, sleeps 4 in 3 bdrms, swim pool, exercise rm, recreation rm, 2 car garage, close to food market & El Cerrito Plaza, BART & public trans, busing to school, Denyse, 848-1830, [email protected]

ALBANY, 1 bdrm condo, 555 Pierce St, avail 2/15, unfurn, clean, new carpet, exc cond, all util paid, sec parking garage, wheelchair access, bay view, storage, heated pool, tennis court, gym, coin laundry on premises, 24-hr sec, refrig, stove, dw, 12 mo lease, $1,250/mo+ $1,500 sec dep, Mary, X4701, 816-9702

MISC FOR SALE-

BABY STROLLER & JOGGING STROLLER, used but in fairly good cond, $50/bo, baby jogger II made by racing strollers w/16” wheels, weighs only 20 lbs, $100/bo for baby jogger, Greg Stover, X7706, 527-7644

COMPUTER, Mac G3 desktop, 120 GB HD/768 MB RAM, $200; monitor 17” optiquest V95, $100; dig camera, Canon Elph 2 MP, $200; blue armchair, $20; coffee table, $30, Pierre or Margot, 524-8672

NORDIC TRAK ski machine, $50, Birgitta, 758-3230

PANASONIC TV 25” color CTG-2530, video & audio jacks, quartz tuning, modern wood cabinet, exc picture, exc cond, $75; TV/VCR cart, Bush V335, 2 shelves, 2 glass drs, 31”x21”x20”, $35; TV swivel base, 24”x15”, $13, Ron, X4410, 276-8079

PIANO FOOT STOOL for young children, handmade solid wood, adjustable, like new $50, Aurora, X6439, 799-2323

PILATES performer & videos for sale, one video shows setup/basic exercises, others for upper/lower body, $175, Antoinette, X5246

PRESSURE WASHER 2200 PSI @3GPM. gas powered, portable, made by Generac, like new, $150, Steve, X6598

WHEEL COVERS, two used 14-in, fit most cars, $2/ea; Nikon 35 mm auto camera, 35-70mm zoom w/ red eye red, $65/bo; basic phone w/cord, dig clock, message pad, $3; microwave, $35/bo; 4 small & 4 med plates, 1 lge plate, $10; set of 4 lge dinner plates, $8; mugs, container, spoons, forks, chopsticks, $3, Minmin, X5484, 847-5130

FREE- INFLATABLE 2-pers kayak, one slow leak, 2 sets of paddles, 2 flotation vests; modem 56 KBS; rolling cart, dark laminated wood, Pierre or Margot, 524-8672

VACATION-

SO LAKE TAHOE, spacious chalet in Tyrol area, close to Heavenly, peek of lake from front porch, fully furn, sleeps 8, sunny deck, pool & spa in club house, close to casinos & other attractions, $150/day + $75 one-time clean fee, Angela, X7712 or Pat or Maria, 724-9450

PARIS, FRANCE, near Eiffel Tower, furn, eleg & sunny 2 bdrm/1 bth apt, avail yr round by week/mo, close to pub trans/shops, Geoff, 848-1830, [email protected]

LAKE TAHOE, 3 bdrm home, 2-1/2 bth, fenced yrd, quiet sunny location, skiing nearby, great views of water & mountains, $195/night, 2 night min, Bob (925) 945-9345

Flea Market Policy

Ads are accepted only from Berkeley Lab employees, retirees, and onsite DOE personnel. Only items of your own personal property may be offered for sale.

Submissions must include name, affiliation, extension, and home phone. Ads must be submitted in writing (e-mail: [email protected], fax: X6641) or mailed/delivered to Bldg. 65.

Ads run one issue only unless resubmitted, and are repeated only as space permits. The submission deadline for the Feb. 6 issue is Friday, Jan. 30.